AI's Agency Comes from Us

How my experience with Tesla Full Self Driving is helping me think through teaching in the age of AI

Thank you for being here. As always, these essays are free and publicly available without a paywall. If my writing has been valuable to you, please consider sharing it with a friend or supporting me as a patron with a paid subscription. Your support helps keep my coffee cup full.

We need to talk more about generative AI and agency. There's plenty of talk about how AI agents are the next big thing in AI. But what exactly is agency? And can AI have it?

I'm certainly no philosopher, but it seems to be relatively well established that agency requires, at minimum, intention and autonomy. In other words, you need to act (1) with purpose, not merely deterministically in response to a stimulus, and (2) without direct control by external forces.

To be honest, I'm glad I'm not a philosopher. Even without deep expertise in philosophy, it's clear that the companies building AI systems are playing fast and loose with the terminology here. For as crazy as all this drives me, I can't imagine how annoying it must be for the philosophers who think even more deeply about these ideas.

AI companies talk about their tools being able to reason, think, and write. There’s only one problem. They don't. All they do is emulate particular features of those behaviors. Reasoning is more than producing words that look like a reason. Thinking is more than processing and computation. Writing is more than extruding syntactically correct text. Both the product and process matter.

My hunch is that the same thing will be true of AI “agency.” To be sure, AI tools have at least some of the features of agency. There's no denying that they have a degree of autonomy. They're able to take action in the real world, at least in some limited ways. But intention? It seems almost definitionally true that these systems are not acting with any real intentions besides the ones we give them. Just like we should be asking ourselves if AI can really think, reason, or write, we should be asking ourselves whether AI really has agency, at least in the fullness of what that means.

The seeds of this essay were planted last Monday when I was in San Francisco for a focus group meeting on AI, but they sprouted on Friday morning.

As I prepared to go give a talk about AI, it felt fitting to hop into the Tesla Model Y I had rented on Turo for my quick trip to Texas, enter the address for the parking garage near the venue, and pull the shifter lever down a few clicks to enter Full Self Driving mode. As the car began to back out of my driveway and navigate through the neighborhood where my Airbnb was located, it felt like I was getting a glimpse of the future.

Unfortunately, this vision of the future was coupled with a number of concerns, as it should be. Just the day before, while on the plane to Austin, I had read a recent paper from a team at Microsoft analyzing the effect of AI use on critical thinking. One of the main takeaways from their study was that "higher confidence in GenAI is associated with less critical thinking, while higher self-confidence is associated with more critical thinking." In other words, who and what you trust matters.

Instead of rehashing all the details of the study, I'll point you to the paper itself and to an excellent post about it published last week. But I do want to take a minute to talk about what these findings mean for us as educators.

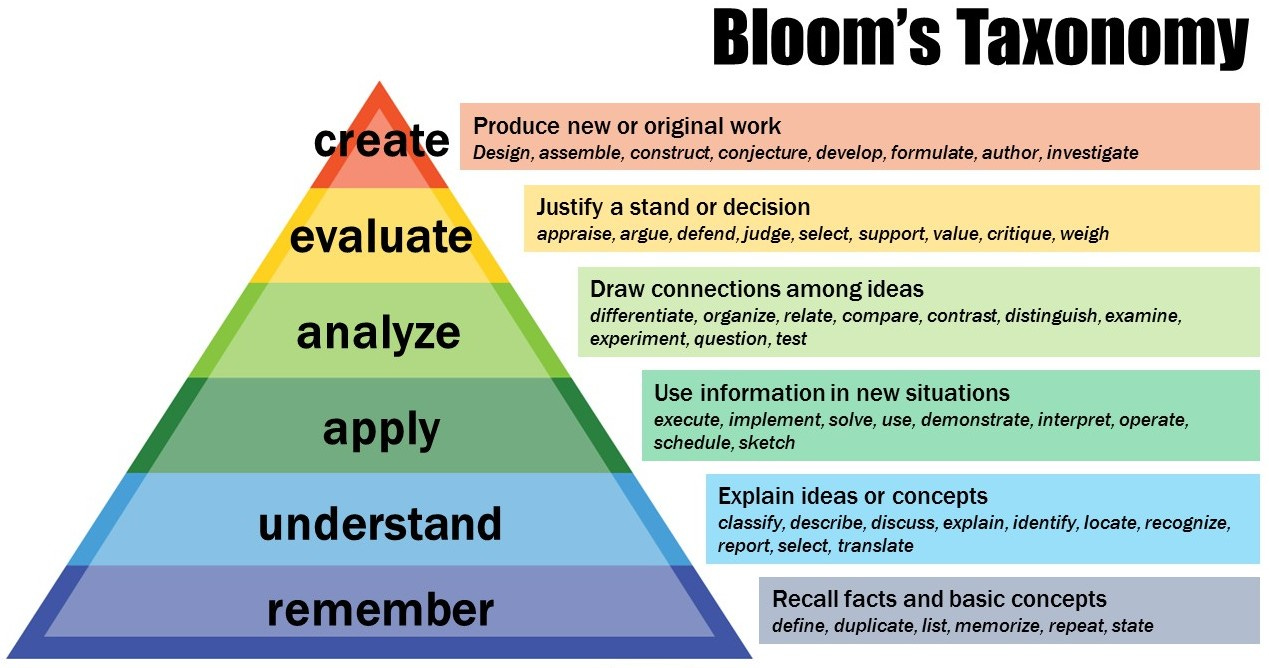

The Microsoft paper uses Bloom's Taxonomy as a framework to understand the anatomy of critical thinking. Bloom's Taxonomy helps to categorize and organize different types of cognitive skills and learning objectives, explaining how certain types of learning build on the foundation of others. It starts with more fundamental tasks like memory and recall at the base of the pyramid, building through understanding, application, and analysis toward evaluation and creation at the top.

Technology naturally tempts us to outsource slices of the pyramid. Ubiquitous access to the Internet and Google tempts us to think that we need not remember facts and concepts. Why bother memorizing when we can always look them up? In a similar way, LLMs tempt us to outsource the rest of the layers too. Why bother working to understand a concept if we can just write a prompt and apply it directly? Why bother learning how to apply knowledge to a given situation if we can just ask AI to do it?

Most things that sound too good to be true...probably are. Some would argue that AI democratizes expertise, but that's not quite right. AI doesn't democratize expertise; it democratizes the appearance of expertise. While the two may look the same on the surface, one is solid and the other is hollow. Perhaps you can get away with applying knowledge without understanding it, but doing so requires you to trust the foundation. Beware the foundation you build upon. Rock or sand? You choose.

It's one thing to outsource computation to a computer whose failure modes are at least well understood. It's another thing altogether to outsource understanding to a tool that generates its output by predicting highly likely strings of tokens. This is not to say that LLMs cannot be helpful in these areas, but it’s dangerous to view them as replacements or to rely on them too heavily.

All of this was running through my brain as I sat in my Tesla in the part of the car that will soon be known only by those of us of a certain age as the driver's seat. As my foot hovered above the pedal and my hands floated above the steering wheel, I couldn't help but wonder how my driving behavior would change on my tenth or hundredth ride. Surely with enough time, I would come to trust the car to take me from point A to B without issue. Surely I would become less vigilant in my oversight.

The feeling I had while riding in my Tesla is a tug that I bet all of us will feel in increasing measure in the days and years to come. In an AI-saturated world, we’ll continually be asked which skills are worth learning.

It’s really not a bad thing to be forced to answer that question. But I especially worry about the students who are being told by the Teslas of the world that they need not learn how to drive. Perhaps it's being said in hushed tones now, but the voices are getting louder. Do we know what to teach young people?

Without a doubt, some skills will become obsolete, in the same way that you once needed to know how to crank a car to start it and now you don't. In the future, you won’t even need to start the car; you’ll just press the pedal. But we should think through the downstream consequences carefully. If we dismiss these skills as obsolete and replace them with AI, we may end up robbing our students of the foundation that Bloom's Taxonomy suggests they need to enjoy the higher-level cognitive tasks: the activities that allow us to experience the fullness of the joy of discovery and creation.

At the end of the day, let's not miss the forest for the trees. If you outsource something, you outsource it. You're ceding control. Whatever agency AI has is from us. Let's be thoughtful about what we give away.

Got a thought? Leave a comment below.

Reading Recommendations

A few weeks ago the Vatican published a “Note on the Relationship Between Artificial Intelligence and Human Intelligence.” I finally had a chance to read it on the plane last week and highly recommend it to you, regardless of your religious convictions. One quote is especially relevant to the discussion about agency above:

> AI’s advanced features give it sophisticated abilities to perform tasks, but not the ability to think. This distinction is crucially important, as the way “intelligence” is defined inevitably shapes how we understand the relationship between human thought and this technology. To appreciate this, one must recall the richness of the philosophical tradition and Christian theology, which offer a deeper and more comprehensive understanding of intelligence—an understanding that is central to the Church’s teaching on the nature, dignity, and vocation of the human person.

Another writer wrote a thoughtful piece on our need for deeper moral imagination in Silicon Valley:

> I've sat in rooms with some of the smartest people in AI, and while their technical brilliance is undeniable, their theories about human consciousness and meaning often feel dangerously reductive. They're attempting to align artificial intelligence with human values while working from remarkably thin concepts of what human values actually are.

Another writer worth reading is on Substack. This week he wrote an introductory piece that offers a good overview of some of his work:

> Our world is built on metaphor, and nowhere is this truer than in science and philosophy. This means two things: we cannot under any circumstances dispense with metaphor; and since the metaphor we choose governs what is illuminated for us, and what is cast into the shadow, we had better be very careful about the metaphors we use.

The Book Nook

American Kingpin is a crazy story. With Ross Ulbricht in the news again lately, I happened to stumble on the story as retold by Nick Bilton. What a doozy. This one is right up there with Bad Blood.

The Professor Is In

As life at home begins to settle back into a rhythm, I’m glad to be phasing back into a more normal schedule on campus. Now that we’re living closer to campus for the time being, #3 even joined me for a walking meeting with my Clinic team lead yesterday morning!

Leisure Line

When in Texas, ya gotta stop at Buc-ee’s.

Still Life

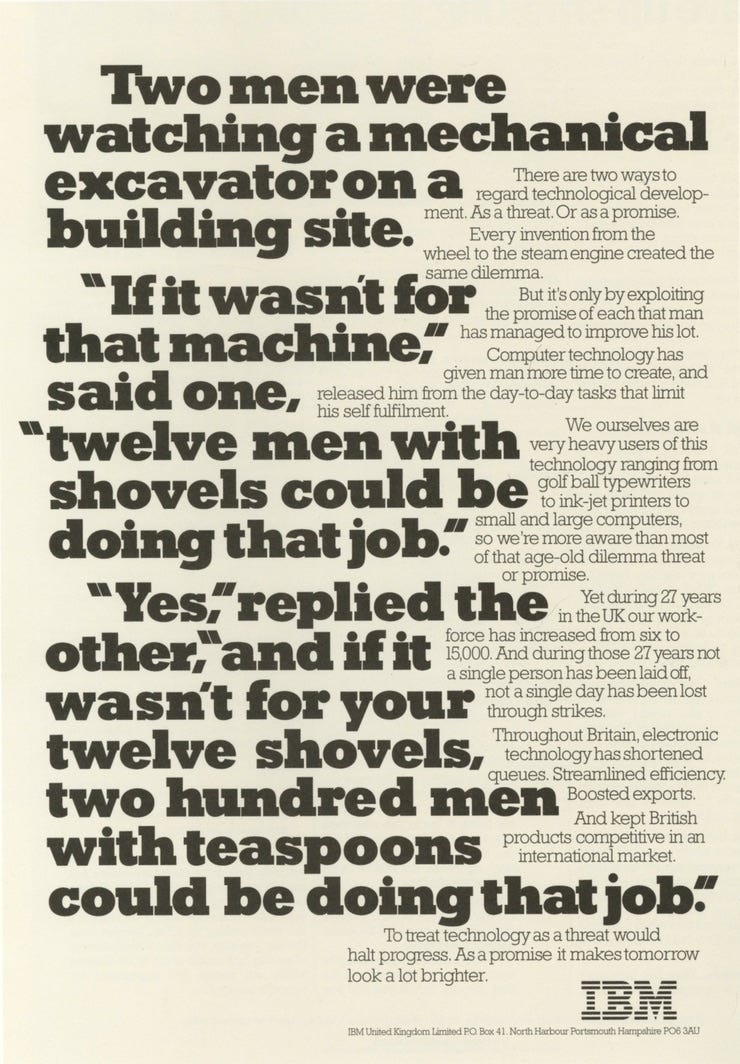

I wish I remembered where I grabbed this from. Just in case you thought that the argument for using technology to increase productivity was a recent one, it’s not.
