How a Certain Scheme to Improve the Human Condition Will Fail

The intellectual and moral hazards of generative AI as understood through stories of squirrels in shredders and forest death

Thank you for being here. As always, these essays are free and publicly available without a paywall. If my writing is valuable to you, please share it with a friend or support me with a paid subscription.

The core ideas for this essay were cultivated in a conversation with Timothy Crouch. To my great delight, we both happened to post our reflections on the same day. Check out Timothy’s excellent take here.

Two stories are haunting me as I think about how generative AI is shaping our future. One is a cautionary tale about missing the forest for the trees. The other is about the tragedy that befell four hundred squirrels who arrived in a foreign country without the proper paperwork.

Unlucky forests

First, the forest. In the first chapter of his book Seeing Like a State, the late political scientist and anthropologist James C. Scott makes his opening salvo against high modernism using a parable from the development of scientific forestry in the late eighteenth century. Through the lens of this framework, forests are valued primarily, if not exclusively, for their ability to produce timber for commercial ends.

What happens next is unsurprising, at least in hindsight. Once the forest is seen as a source of potential revenue, the natural question is: how should we maximize it? In the pursuit of efficiency, the answer is obvious: maximize the value per square foot of forest by cramming in as many of the most valuable trees as possible. In this case, that tree was the Norway spruce. Scott again:

The principles of scientific forestry were applied as rigorously as was practicable to most large German forests throughout much of the nineteenth century. The Norway spruce, known for its hardiness, rapid growth, and valuable wood, became the bread-and-butter tree of commercial forestry. Originally, the Norway spruce was seen as a restoration crop that might revive overexploited mixed forests, but the commercial profits from the first rotation were so stunning that there was little effort to return to mixed forests. The monocropped forest was a disaster for peasants who were now deprived of all the grazing, food, raw materials, and medicines that the earlier forest ecology had afforded. Diverse old-growth forests, about three-fourths of which were broadleaf (deciduous) species, were replaced by largely coniferous forests in which Norway spruce or Scotch pine were the dominant or often only species.

In the short run, at least on the time scale of trees where a generation might take eighty years to grow to maturity, the results were "stunning." These Norway spruce, planted in the soil full of nutrients from the diverse ecosystem that preceded them, flourished and yielded a very valuable crop. Unfortunately, the second generation was not quite so lucky.

In the German case, the negative biological and ultimately commercial consequences of the stripped-down forest became painfully obvious only after the second rotation of conifers had been planted. "It took about one century for them [the negative consequences] to show up clearly. Many of the pure stands grew excellently in the first generation but already showed an amazing retrogression in the second generation. The reason for this is a very complex one and only a simplified explanation can be given.... Then the whole nutrient cycle got out of order and eventually was nearly stopped.... Anyway, the drop of one or two site classes [used for grading the quality of timber] during two or three generations of pure spruce is a well known and frequently observed fact. This represents a production loss of 20 to 30 percent."

This second-generation regression was so severe that a new word was born to describe it: Waldsterben, which means forest death. Scott sums it up nicely.

An exceptionally complex process involving soil building, nutrient uptake, and symbiotic relations among fungi, insects, mammals, and flora--which were, and still are, not entirely understood--was apparently disrupted, with serious consequences. Most of these consequences can be traced to the radical simplicity of the scientific forest.

Perhaps you can get a hint of where I might be going with this thread as related to generative AI and education, but bear with me for just one more story before I try to tie the pieces together.

Unlucky squirrels

The second story comes from the opening of another book, The Unaccountability Machine by Dan Davies. It's about the untimely end of 440 squirrels who arrived at Amsterdam's Schiphol airport without the proper documentation.

The story, which is truly stranger than fiction, begins with the squirrels aboard a KLM Royal Dutch Airlines flight, en route to a collector in Athens. When the flight arrived in Amsterdam, KLM discovered that the squirrels did not have the import documents required to legally bring them into the country. What to do?

After passing the question up the chain of command, KLM received a decision from the Dutch Department of Agriculture, Environment Management, and Fishing: destroy the squirrels. So, they fed all four hundred plus squirrels into an industrial shredder.

You, like me, may rightly be asking questions about this shredder. Apparently, it was an instrument specifically designed to disappear what were deemed to be "worthless male chicks." Nor was this the only time the airline staff had used it. In the months before this particularly large batch of squirrels was killed, a number of other animals, including several other squirrels, a few water turtles, and a small flock of parakeets, had met their untimely end in this shredder.

Davies asks the question that we all are asking: what went wrong?

So what went wrong, and who was responsible for shredding the squirrels? The first question is easier to answer than the second. KLM had set up a system whereby decisions about animals with the wrong import paperwork were left to someone at the agriculture department. In doing so, everyone involved had accepted that a low baseline level of animal destruction was tolerable – which is why they bought the poultry shredder. But, in so far as it is possible to reconstruct the reasoning, it was presumed that the destruction of living creatures would be rare, more used as a threat to encourage people to take care over their paperwork rather than something that would happen to hundreds of significantly larger mammals than the newborn chicks for which the shredder had been designed.

The characterisation of the employees' decision as an 'assessment mistake' is revealing; in retrospect, the only safeguard in this system was the nebulous expectation that the people tasked with disposing of the animals might decide to disobey direct instructions if the consequences of following them looked sufficiently grotesque. It's doubtful whether it had ever been communicated to them that they were meant to be second-guessing their instructions on ethical grounds; most of the time, people who work in sheds aren't given the authority to overrule the government. In any case, it is neither psychologically plausible nor managerially realistic to expect someone to follow orders 99 per cent of the time and then suddenly act independently on the hundredth instance.

Are we destined to be the forests, the squirrels, or both?

So why exactly have these two stories been haunting me as I think about AI? In short, they illustrate just how delicate we humans are as moral and intellectual creatures. The stories of Waldsterben and shredded squirrels should be cautionary tales. But I'm not sure we're thinking far enough down the road to see how badly this could go.

So far, we've developed enough situational awareness and collective will to avoid nuclear disaster, germ-line editing of the human genome, and the threat of mirror life. But we haven't reached that point yet with generative AI. At least part of the problem is that we are continually sitting on the edges of our metaphorical seats, trying to keep up with what is going on. The pace of new developments seems only to be increasing, even as the continuing trajectory of progress comes into question.

Trying to keep up means that we constantly need to reassess and adjust our models. Just what will the models be able to do in six months or a year? Will the performance continue to scale with compute? What exactly will be the impact of AI-generated outputs mixed into the training data of future models?

How are we helping the leaders of tomorrow prepare?

One of my biggest concerns right now is the way that we are helping our students to grapple with the impact of these tools on their expertise. Our situation mirrors that of scientific forestry in the mid-1800s. We're performing experiments whose outcome we'll only really understand a decade down the line.

I'm willing to bet that in a decade or so, we're going to notice a Waldsterben of our own intellectual capacity. Just like the first crop of Norway spruce was unbelievably fruitful, we'll see an explosion in productivity due to generative AI. But this growth in productivity will be less about the power of generative AI itself, and more about the nutrients of the ecosystem that it is tapping into. The expertise and skills of the current experts, hard won by years of productive struggle with hard concepts, will be rich soil for generative AI to exploit. I doubt the next generation will be so lucky.

Generative AI is only as useful as the foundation of expertise and wisdom it rests upon. This is a lesson that I can't emphasize enough for the experts of tomorrow.

You should experiment with generative AI as you're honing your craft. There are bound to be ways that generative AI, just like any technology, will be able to help you build real, lasting skills more effectively. But to thoughtlessly integrate these tools is a mistake. We must be on our guard to avoid mistaking a shallow, generative AI-powered lookalike for true expertise and mastery.

Today's students are tomorrow’s experts. They are the second generation of Norway spruce. The generation that will inherit a deeply compromised ecosystem devoid of the nutrients that are needed to thrive. Let's be mindful of the price we will pay for chasing short-term productivity gains without consideration of the long-term foundation for innovation.

Wisdom over intelligence, virtue over vice

The threat of generative AI to our intellectual life is significant enough. And yet, I fear it is only a part of the story. The even bigger hazard is how generative AI threatens to undermine our moral reasoning and wisdom. What is intelligence really worth if it is not coupled with wisdom?

Generative AI is beyond the wildest dreams of the high modernist ideology that Scott critiques in Seeing Like a State. I can only imagine what Scott would have said about generative AI. I will hazard a guess that he wouldn't be thinking happy thoughts.

In Scott's language, generative AI is nearly tailor-fit to make the messiness of the real world "legible." The overwhelming goal of the project of generative AI is to achieve a level of abstraction using statistical modeling that can help us better understand and navigate the entirety of documented human knowledge and experience.

Feeling like a squirrel staring down the shredder yet? We're seeing some of these ideas from the high-modernist playbook roll out in real time. Whether it’s the recent on-again, off-again tariffs from the President with math that looks suspiciously similar to ChatGPT's or the latest funding cuts made by DOGE, we're seeing all too clearly the societal impact of generative AI rolled out en masse. We need not experience the singularity or invent AGI for generative AI to have a significant impact on our world as we know it.

Accountability sinks all the way down

In all of this, I see the echoes of a core insight from Davies' book: the accountability sink. An accountability sink, Davies says, is defined by the property of a decision where "nobody is to blame."

Take the case of the shredded squirrels. Surely the bureaucrats who designed the policy that animals cannot be imported without proper documentation could not have imagined that it would lead to the untimely end of hundreds of unfortunate bushy-tailed rodents. How could one truly hold them to account for this outcome, which was the result of an edge case that would have been nearly impossible to imagine beforehand? What about the ground crew who did the deed? What choice did they have? They were simply following orders, not only from their bosses but by extension from the government via the Department of Agriculture.

Generative AI is the ultimate accountability sink. It presents the appearance of legibility. Perhaps there is even a bit of truth to that notion. There are indeed patterns of the world that only emerge when you widen your aperture to see them. Some of those features will surely escape the perspective of the individual and require more sophisticated tools like machine learning to unearth.

And yet, the appearance of legibility without its substance is truly dangerous. It is a tool that the high modernist could only hope for: the One Ring, which gives meaning and structure to the messiness and complexity of the world and the appearance of the power to fix it. It offers a nearly irresistible temptation to outsource our moral responsibility to the machine. Who are we to question the response from a system that is trained on the entirety of human knowledge?

Resharpen the corners

One of my mentors in undergrad compared the conscience to a cube with sharp corners. The sharp corners prick you when you do something wrong. But choose to make a wrong decision even when you know better, and the sharp corners of the cube would be slowly sanded down. Intentionally do the wrong thing enough times, and your conscience would be seared—the once sharp corners of the cube ground down to nothing.

Today's sandpaper is full force. Every time we use a generative AI tool, we're implicated in the development process that brought it into being. When we deploy generative AI tools, they come with real-world impacts. The decisions that we make, from the level of government and society right on down to the individual, matter.

As we think about the impact of generative AI and the places we are choosing to apply it, we would do well to consider the subtitle of Scott's book: "How Certain Schemes to Improve the Human Condition Have Failed."

The newest scheme on the block is generative AI. Despite small pockets of potentially positive applications, I'm finding it increasingly hard to believe that generative AI writ large won't just be the latest scheme to fail to improve the human condition.

Perhaps it can be used to enhance human flourishing within narrow applications. But let’s not miss the forest for the trees. That’s the way the squirrels get shredded.


Reading Recommendations

Lots of good stuff to read this week.

Don’t outsource your mind

First up is a great post on outsourcing our minds to generative AI.

Our ability to understand material is related to the amount of effort we put into producing, analyzing and evaluating it. If you’re a student and you just read a textbook chapter, you won’t remember it as well compared to if you take notes on it and ask yourself questions about it.

The real danger as we outsource more of our mental effort to AI, is that we lose agency over our own cognitive abilities. AI is a technological breakthrough, but it’s altering the very way we think. We’re speeding forward in this new world of AI barely noticing how the landscape is shifting. While concerns about privacy, ethics, values, environmental impact, and job displacement have dominated discussions on AI, I’m struck by how we have not yet talked much about how it can affect our cognition.

Recognize your intellectual roots

While I was reading up on Seeing Like a State, I stumbled over an excellent review. In it, the reviewer helps to make Scott’s own thoughts legible by connecting them to their intellectual roots.

Large AI models are cultural and social technologies

Cosma Shalizi, James Evans, and their coauthors wrote a fantastic piece in Science earlier this month. In their essay, “Large AI models are cultural and social technologies,” they cut through the noise and make sharp assessments of not just what LLMs are, but what they mean. Well worth a read.

The narrative of AGI, of large models as superintelligent agents, has been promoted both within the tech community and outside it, both by AI optimist “boomers” and more concerned “doomers.” This narrative gets the nature of these models and their relation to past technological changes wrong. But more importantly, it actively distracts from the real problems and opportunities that these technologies pose and the lessons history can teach us about how to ensure that the benefits outweigh the costs.

Bringing computer science and engineering into close cooperation with the social sciences will help us to understand this history and apply these lessons. Will large models lead to greater cultural homogeneity or greater fragmentation? Will they reinforce or undermine the social institutions of human discovery? As they reshape the political economy, who will win and lose? These and other urgent questions do not come into focus in debates that treat large models as analogs for human agents.

Seeing like a data structure

I happened to stumble on Barath Raghavan and Bruce Schneier’s piece titled “Seeing Like a Data Structure.” In it, they riff on Scott’s perspective through the specific lens of data structures. Another excellent read.

As Mumford wrote in his classic history of technology, “The essential distinction between a machine and a tool lies in the degree of independence in the operation from the skill and motive power of the operator.” A tool is controlled by a human user, whereas a machine does what its designer wanted. As technologists, we can build tools, rather than machines, that flexibly allow people to make partial, contextual sense of the online and physical world around them. As citizens, we can create meaningful organizations that span our communities but without the permanence (and thus overhead) of old-school organizations.

Seeing like a data structure has been both a blessing and a curse. Increasingly, it feels like it is an avalanche, an out-of-control force that will reshape everything in its path. But it’s also a choice, and there is a different path we can take. The job of enabling a new society, one that accepts the complexity and messiness of our current world without being overwhelmed by it, is one all of us can take part in. There is a different future we can build, together.

Nailed to the virtual Wittenburg Door

Twelve wise theses and predictions about “AGI” from Timothy Crouch.

The inarticulability of intelligence has (at the very least) to do with its embodied and relational aspects. “Mind” is neither identical with nor even co-extensive with “brain activity”; rather, “mind” is (to crib from Dan Siegel’s definition) is an embodied and relational process. Emotion in particular seems, as far as the causality can be determined, to be body-first, brain-second, such that it is only articulable after the fact (and in a way that changes the emotional experience). Michael Polanyi’s great work demonstrates in a philosophical register what musicians, artists, and craftspeople have always known intuitively: that the “cognitive task” of playing an instrument or using a tool depends on integrating the instrument or tool into one’s bodily experience, in an inarticulable way. And relationship through interaction with other embodied minds is such a complex process, with so many emergent layers, that not only is it poorly theorized or modeled now, it may be impossible to exhaustively theorize or model — especially because it primarily seems to take place in and through the pre- and in-articulate dimensions of cognition.

The Book Nook

Friday before last I had a chance to join a virtual book launch event for the US release of Dan Davies’ book, co-hosted by Bloomberg Beta. It was great fun to be in the same space with a number of folks who have inspired my own writing and thinking about generative AI.

You all should go buy and read Dan’s book! The sort of analysis that Dan and others like him are doing helps to put the pieces together for how we should think about generative AI: not primarily as a technological artifact, but as a social and cultural one.

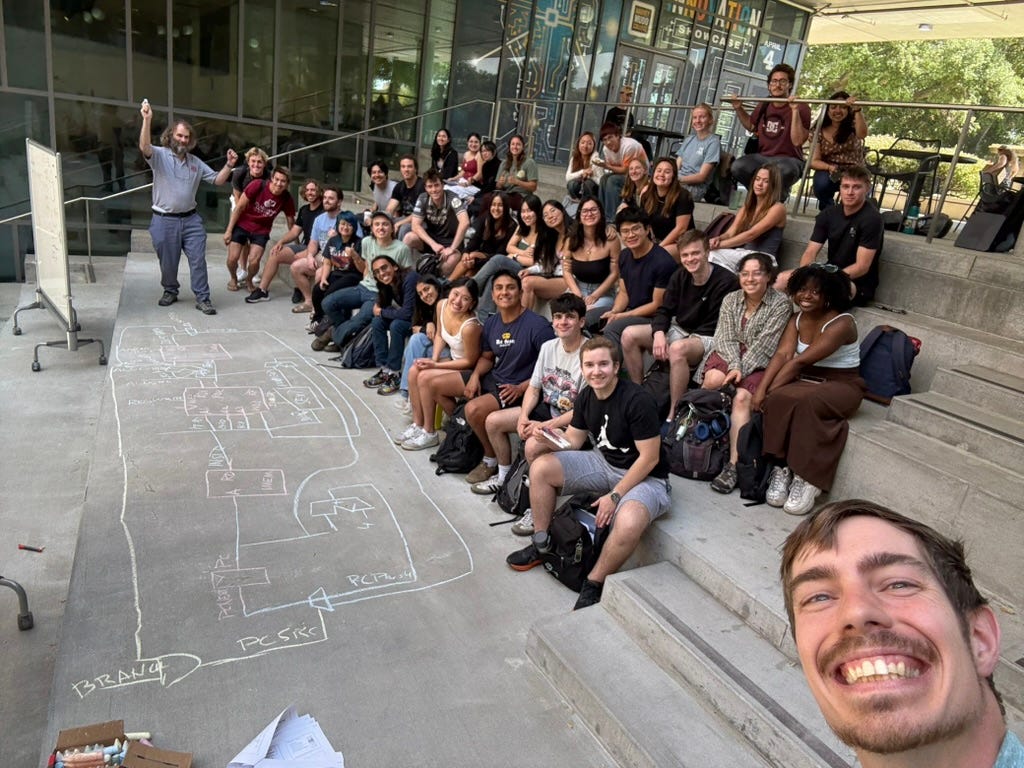

The Professor Is In

Last Friday, we took class outside. It was the first lecture in microarchitecture, the part of computer architecture that connects the higher-level ideas with the hardware needed to implement them. In this lecture, a David Harris special, we start from a blank slate and build up a simple processor piece by piece. So fun!

Leisure Line

One of the benefits of living close to campus is being within a short bike ride of the various things going on. Last week we dropped by batting practice on Thursday afternoon and a game on Saturday.

Still Life

Can’t beat Randy's.

Love the pairing of the examples and putting them in the context of education. Frankly, I think it's easy to go back farther with the forest example. Not long after the forests were maximized for production in Germany, Horace Mann visited Prussia and came up with a similar plan for education -- maximize learning by separating off each subject area from the ecosystem of learning that had been more common. That partitioning has proven to be appealing and long-lasting. Unfortunately we lost sight of the forest for the trees. AI may accelerate the decline, especially as we try to prevent students from learning basic responsibilities when encountering new technologies. I'm afraid we're sending many of them into the shredder as a result of our unwillingness to actually deal with the realities that are emerging.

This was a great essay. I am not remotely an expert on this stuff - basically tech illiterate. But there's a wonderful article by Maria Farrell (Henry Farrell's sister) and Robin Berjon from last year, which similarly opens with the forest example from James Scott's book. If you haven't read it, it's really worth checking out: https://www.noemamag.com/we-need-to-rewild-the-internet/

I love your analogy of the cube with the sharp corners slowly sanded down (growing desensitization). Importantly, there's a difference between a shiny new scheme that "fails to improve the human condition" like countless others, and a shiny new scheme that actually causes unmeasurable, irreparable damage with effects reverberating over generations. One concern I have with the AI stuff is that it has some potential to cause real damage, yet this damage might be difficult to assess and parse from all the other uncharted territory - especially as it becomes increasingly normalized and integrated into our social fabric.