LLMs As Glue

LLMs can't do end-to-end automation. It doesn't matter.

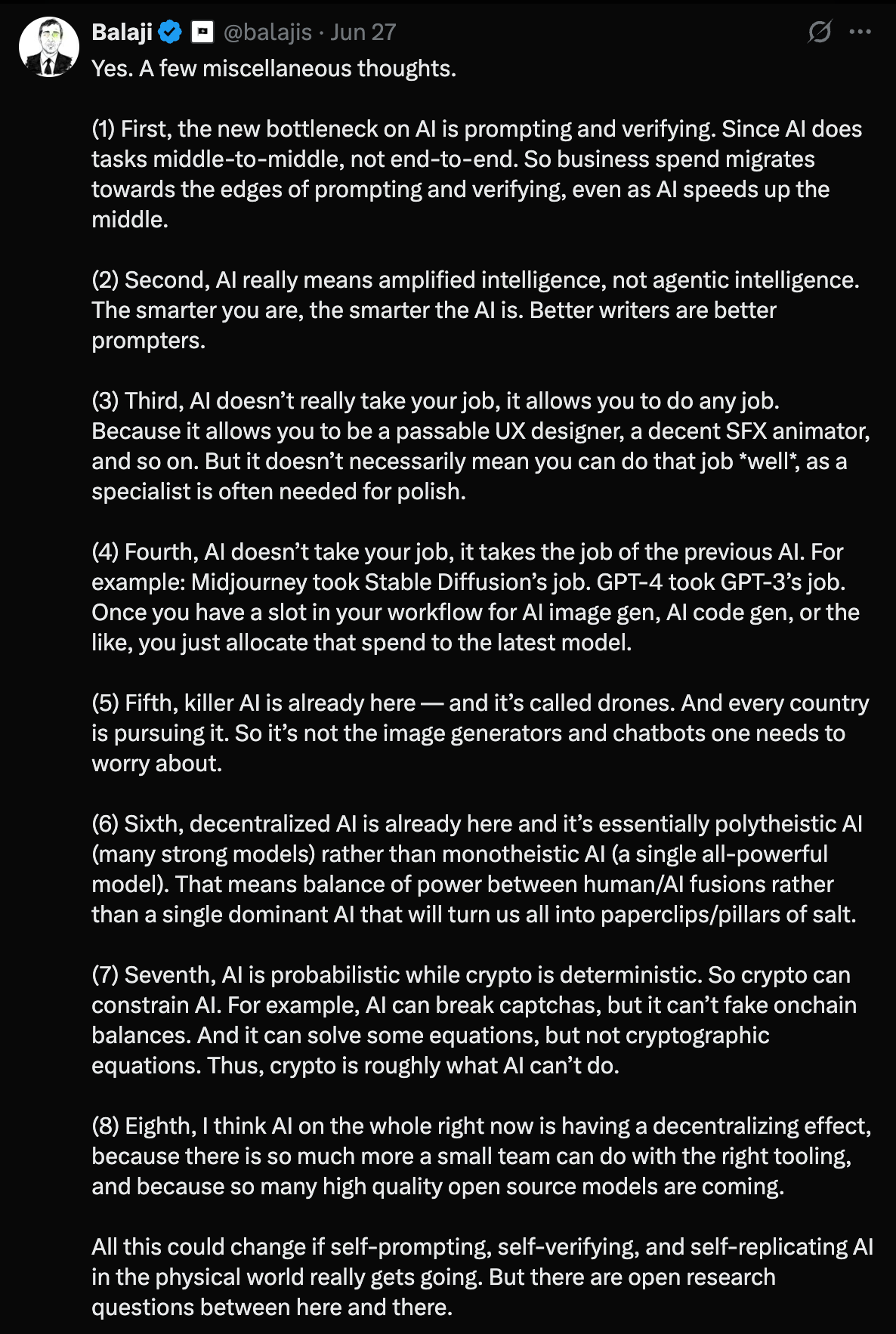

This week I've had two ideas rattling around in my head. The first was from a tweet from Balaji. The second is a line from Puri. It's a throwaway line in the middle of one of his interviews: "common traits uncommonly together are very valuable."

There's a lot to unpack here, but I want to focus on one point in Balaji's tweet that is particularly salient: AI automates tasks middle-to-middle, not end-to-end.

This strikes me as one of the core distinctions right now between the reality and the vision for AI. It also seems to separate the AI amateur from the professional. If you're not using AI but are drinking the marketing Kool-Aid, you'll stoke the illusion that AI can automate tasks for you by completely replacing you. But this is not how automation works, and this is not how AI will automate our tasks.

Together, these observations suggest a few predictions about where we're likely to see generative AI roll out most successfully in the world over the next few years. My hunch is that the biggest opportunities for most people are not waiting for some big advance that will find the holy grail of end-to-end automation, but rather a more humble (but no less impactful) future where LLMs become the glue to amplify human intelligence.

LLMs as Glue to Amplify Intelligence

LLMs are like the body of a Swiss Army Knife, not a magic wand. They are the glue that holds together a variety of other tools that are already very good. You've got databases which are already excellent for storing structured data, search engines which can find and return specific webpages, and a whole host of other programming tools that are solid instruments with specific applications.

If you, like me, are sympathetic to the argument that LLMs will not be the foundation for general reasoning or whatever definition of artificial general intelligence (AGI) is hot today, then a few questions emerge. What then is an LLM good for? And how should we use it?

The "LLMs are not intelligent" drum is one that folks like Yann LeCun (among others) have been beating for some time. I wrote a bit about an excellent podcast conversation with Chollet that I found very enlightening. At their root, LLMs are, in one insightful description, "flexible interpolative functions mapping from prompts to continuations." The interpolative part is important and is a key piece of Chollet's argument, too.

My basic mental model for an LLM is a very sophisticated autocomplete. LLMs will be most useful not as a magic wand to automate tasks end-to-end. While this is the Silicon Valley pipe dream, and in many ways the implicit promise of many of the advertisements around AI right now, the truth is that LLMs are not some sort of human-like intelligence. They cannot reason, think, read, write, analyze, criticize, or do many of the verbs that are often attributed to LLM-powered tools.

In fact, they can only do one thing: flexibly interpolate from prompt to continuation.

But here's the thing: it doesn't matter. Just because an LLM cannot reason or think doesn't mean it's useless, in the same way that a car is still useful even though it cannot fly. (It also doesn't mean that it is harmless.) What matters is that you use the thing according to its strengths and not its weaknesses. Use the LLM to interpolate from prompt to continuation. Drive the car.

As it turns out, there are many instances where the ability to process and encode natural language is extremely useful. There are many places where unstructured data is the norm and where being able to flexibly interact with a computer without needing to pay close attention to the syntax that it requires is very helpful.

As a simple example, you can imagine that a typical function in a programming language requires that you list the arguments in a specific order. There is no reason for this other than the fact that the computer requires you to do so. What the LLM can do (and quite well in my experience) is flexibly deal with a certain input and translate it to a structured output.
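To make that concrete, here is a minimal sketch of the idea in Python. Everything in it is my own illustration: the `Meeting` fields, the `schedule` function, and especially `stub_llm`, which is a hypothetical stand-in that returns a canned reply so the example runs without any model or API. The point is only the shape: the rigid function wants its arguments just so, and the reply from the model gets validated into a structure before ordinary code touches it.

```python
import json
from dataclasses import dataclass


@dataclass
class Meeting:
    title: str
    date: str          # ISO date string, e.g. "2025-07-01"
    duration_min: int


def schedule(title: str, date: str, duration_min: int) -> str:
    """A conventional function: arguments must arrive in exactly this order."""
    return f"{title} on {date} for {duration_min} min"


def parse_llm_reply(reply: str) -> Meeting:
    """Validate the JSON we asked the model for before trusting it.

    Raises KeyError or ValueError if the reply is missing fields or malformed.
    """
    data = json.loads(reply)
    return Meeting(
        title=str(data["title"]),
        date=str(data["date"]),
        duration_min=int(data["duration_min"]),
    )


def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; returns a canned reply."""
    return '{"duration_min": 30, "date": "2025-07-01", "title": "Office hours"}'


request = "Can you put office hours on my calendar for July 1st, half an hour?"
meeting = parse_llm_reply(stub_llm(request))
summary = schedule(meeting.title, meeting.date, meeting.duration_min)
```

Notice that the canned reply lists its keys in a different order than `schedule` expects. That doesn't matter, because the structured output is matched by name and then handed to the strict function by regular code.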

This, in my mind, is one of the major use cases for LLMs. The low-hanging fruit that I see ahead of many of us is not some sort of massive LLM-powered super intelligence that we interact with through the standard ChatGPT or Claude interface. It's more like a custom workflow automation built with n8n or Zapier that uses the LLM for specific tasks within a bigger workflow. It turns out that these sorts of automations are quite powerful, and given the extensive application programming interfaces (APIs) that have proliferated across the web, we already have the pieces to plug in to build some powerful apps and programs without needing to throw the whole task to the LLM and hope that it will do the right thing. Adam D'Angelo, CEO of Quora, former CTO of Facebook, and fellow Caltech alum, had a tweet go viral this week announcing a new position at Quora that does just this sort of thing.
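Here is a rough sketch of what "the LLM in the middle" might look like if you wrote it by hand in Python instead of wiring it up in n8n or Zapier. The ticket-routing scenario, the label set, and the function names are all assumptions of mine for illustration, and `keyword_stub` is a hypothetical placeholder for a real model call so that the sketch runs offline. The ends of the workflow are plain, deterministic code; the model only handles the one fuzzy step, and its answer is checked before anything acts on it.

```python
from typing import Callable

ALLOWED_LABELS = {"billing", "bug", "other"}


def classify_ticket(text: str, llm: Callable[[str], str]) -> str:
    """The one LLM step: map free text to a label, then check the answer."""
    prompt = f"Label this support ticket as billing, bug, or other:\n{text}"
    label = llm(prompt).strip().lower()
    # Ordinary code guards the boundary; unknown answers fall back to "other".
    return label if label in ALLOWED_LABELS else "other"


def route_tickets(tickets: list[str], llm: Callable[[str], str]) -> dict[str, list[str]]:
    """Deterministic ends: tickets come in as a list and leave in queues."""
    queues: dict[str, list[str]] = {label: [] for label in sorted(ALLOWED_LABELS)}
    for ticket in tickets:
        queues[classify_ticket(ticket, llm)].append(ticket)
    return queues


def keyword_stub(prompt: str) -> str:
    """Hypothetical stand-in for a model call so the sketch runs offline."""
    ticket = prompt.split("\n", 1)[1].lower()
    if "refund" in ticket:
        return "Billing"
    if "crash" in ticket:
        return "bug"
    return "no idea"


queues = route_tickets(
    ["I need a refund", "App crashes on login", "Hello"],
    keyword_stub,
)
```

Passing the model in as a plain callable is a deliberate choice in this sketch: you can swap the stub for a real API client later without touching the rest of the workflow, and you can test the routing logic without a model at all.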

As I look over this next year, I've got my eye on finding tasks that are valuable not because of what they signify (e.g., the time spent drafting and authoring a personal recommendation letter), but because of what they accomplish. These sorts of applications are in the same vein as solving the scheduling challenge with a tool like Calendly (or my personal favorite Neetocal).

Once you start to look for these sorts of tasks, the real meat of the question is not what you will automate with AI, but what you will not automate with AI. Your work gets divided into areas of application and non-application. This has obvious applications throughout education and is a current that has run through much of my past writing about AI in educational spaces.

Once you start to see the LLM as glue, the barriers to entry begin to shrink. LLMs won't give you expertise, but they do give you just enough ability to be dangerous. Lots has been said about the ways that LLMs extend your ability to code, but it's not just about being able to code in English. LLMs amplify everyone's programming ability.

For general middle-to-middle automation, you could do a lot worse than learning a little n8n and playing around with some of the simple LLM integrations available. Equipped with a little bit of n8n skill and just enough LLM understanding to be dangerous, you can prototype lots of little apps. Even more importantly than the apps themselves, if your experience is anything like mine, the act of prototyping will fuel your curiosity to keep on learning new things.

The Opportunities on the Jagged Frontier

Maybe it's obvious how Balaji's tweet factors into this post, but where does Puri's line enter the mix? In a few ways.

First, we are living in a moment of technological instability. It is a jagged frontier. There has never been a better time to think about uncommon combinations of common traits. AI lowers the barriers to many combinations that until now would have been hard to imagine, much less act upon.

Secondly, the fact that LLMs will never in and of themselves lead to end-to-end automation means that what is a "common trait" is also shifting. Using a chatbot interface to the latest and greatest LLM is about as easy as it comes. That trait will become increasingly common. And because it will be common, it will not, on its own, be valuable. Rather, figuring out how and where you can apply the middle-to-middle automation that LLMs enable will be the new advantage. Curiosity and a little bit of exploration will become even more valuable than they already are.

LLMs are not intelligent. They do not reason. They do not feel. They are not like humans. They are, in fact, extremely sophisticated (and quite brilliant) algorithms that extend and continue a series of words that we put into them. Once you give up on LLMs being a magic wand, they're actually quite useful. The biggest challenge is getting past the magical thinking and understanding how they actually work. Only then can you actually put them to work.

Reading Recommendations

I will almost certainly write sometime soon about this talk on the ways software is changing again in the era of AI. It's jam packed with really sharp insights and mental models, perhaps the best of which is the idea of Software 3.0.

If you're curious to learn more about n8n × AI, here is a helpful video to get started with.

The Book Nook

My reading habit is still on life support. Still slowly but surely making my way through The Devil and the Dark Water by Stuart Turton. Guess I’ve been spending too much time playing with AI.

The Professor Is In

Last week was a whirlwind with three days in New York at the start of the week and a trip to San Diego this last Friday and Saturday. Always fun to see how my students manage to take ownership of their work when I’m gone, and I’m excited to see the way their projects are shaping up.

Leisure Line

When in Coronado, go to Clayton's Coffee Shop. We discovered this place a few years back when we first explored Coronado and went back again this trip. So good.

Still Life

On the beach at Coronado. Hard to beat San Diego in the summer.

Thanks, this is a helpful analysis that gives me some more words and concepts to share with others but also to structure my own thinking. What's foremost on my mind now in an educational sense is how to get younger people entering the world to commit to developing the skills they need to be capable of using generative AI well--and to find what makes them one kind of 'uncommon' mind that people want to glue to an assembly.

Big agree! "Just because LLMs cannot reason or think doesn't mean it's useless."

The chatter about AI reasoning feels like such a red herring to me. Who cares/Does it really matter that much? I get that we should continue to research the inner-workings and understand how it maps its outputs (etc etc), but this focus completely overlooks what you so succinctly describe in this piece.

I like to focus on "sophisticated pattern matching" to help me "approach it well" and get some use out of it. In other words, it's analyzing my inputs for patterns, checking its data for similar patterns, and then reformatting an output that aligns the two. I think that aligns with your approach but let me know if not.

Another way I try to frame this is -- Imagine if you had a friend who could do that pattern-matching pretty well - on-call 24/7 -- but they occasionally made mistakes and didn't really understand your underlying purpose for the work you did? Or the nature of perspective and experience? You might not chat with that friend all day, every day -- in fact that would be quite exhausting -- but you would certainly have some fascinating conversations with him/her and gain insights into your own thinking from them. That's how I think of GenAI. That aligns of course with the "mirror" concept that is fairly widespread at this point. But I've been trying to reframe it even further into a "sparring partner" approach which forces me to consider these gaps in experience, perspective, and purpose -- while also creating the conditions to reveal user/student thinking on the page. (This was the crux of my student-facing AI activities a few years ago.)

https://mikekentz.substack.com/p/the-butler-vs-the-sparring-partner

In any case, great piece! Thanks for sharing. And Nick Potkalitsky has a good piece on this from a few weeks ago too, if interested.