Mental Models Matter

The importance of understanding LLMs as flexible interpolative functions from prompts to continuations


I'm sitting down to write the first draft of this essay having just given the last lecture in the Digital Electronics and Computer Architecture course (E85) that I've been co-teaching this semester. One of the things I love most about this course and the field of digital design and computer architecture writ large is its beauty. Before you roll your eyes and snicker "nerd" under your breath, just hang with me.

Bust all the black boxes

At the beginning of the semester, we start by learning basic boolean algebra and exploring how we can represent information in zeros and ones. We continue by building on top of that foundation, first by adding logic gates made out of transistors. These logic gates are ways of performing simple operations on inputs, giving us outputs that are true when both inputs are true (AND), when either input is true (OR), or any number of slightly more complicated logical operations.
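To make the idea of a gate concrete, here is a minimal sketch in Python. The function names (`and_gate`, `or_gate`, `not_gate`, `xor_gate`, `truth_table`) are my own illustrative choices, not anything from the course, and bits are modeled as the integers 0 and 1 rather than as voltages on transistors.

```python
# Illustrative only: logic gates modeled as functions on the integers 0 and 1.
def and_gate(a: int, b: int) -> int:
    """Output is 1 only when both inputs are 1."""
    return a & b


def or_gate(a: int, b: int) -> int:
    """Output is 1 when either input is 1."""
    return a | b


def not_gate(a: int) -> int:
    """Output is the opposite of the input."""
    return 1 - a


def xor_gate(a: int, b: int) -> int:
    """A slightly more complicated operation, built only from the gates above."""
    return or_gate(and_gate(a, not_gate(b)), and_gate(not_gate(a), b))


def truth_table(gate):
    """List (a, b, output) for every combination of two input bits."""
    return [(a, b, gate(a, b)) for a in (0, 1) for b in (0, 1)]


if __name__ == "__main__":
    for name, gate in [("AND", and_gate), ("OR", or_gate), ("XOR", xor_gate)]:
        print(name, truth_table(gate))
```

Notice that `xor_gate` contains no new machinery. It is just the simpler gates wired together, which is the pattern the rest of the course repeats at larger and larger scales.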

Computer architecture is one of the most cumulative fields that I've ever encountered. Everything is built on top of what comes before it. As we continue to march through the semester, we leverage the principles of abstraction to combine smaller subsystems to make more and more complicated devices. First, we use logic gates as a building block for describing combinational logic: functions where the output depends only on the current inputs. Then, after introducing a simple structure to store a single bit of data, a flip-flop, we use the concept of finite state machines to build even more complex systems whose outputs depend not only on the current inputs but on past inputs as well.
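The difference between combinational logic and a finite state machine can be sketched in a few lines of Python. This is a toy model under my own assumptions: `half_adder`, `DFlipFlop`, and `detect_two_ones` are hypothetical names, and a loop iteration stands in for one clock cycle.

```python
# Illustrative only: combinational logic versus a tiny finite state machine.
def half_adder(a: int, b: int) -> tuple:
    """Combinational: (sum, carry) depends only on the current inputs."""
    return (a ^ b, a & b)


class DFlipFlop:
    """Stores a single bit; the stored value changes only when clocked."""

    def __init__(self) -> None:
        self.q = 0

    def clock(self, d: int) -> None:
        self.q = d


def detect_two_ones(bits):
    """State machine: output 1 when the current AND previous input are both 1."""
    previous = DFlipFlop()
    outputs = []
    for bit in bits:  # each loop iteration plays the role of one clock cycle
        outputs.append(bit & previous.q)  # uses the current input and stored state
        previous.clock(bit)  # remember this input for the next cycle
    return outputs


if __name__ == "__main__":
    print(half_adder(1, 1))                     # (0, 1)
    print(detect_two_ones([1, 1, 0, 1, 1, 1]))  # [0, 1, 0, 0, 1, 1]
```

The half adder gives the same answer for the same inputs every time. The detector does not: the same input bit produces a different output depending on what the flip-flop remembered from the previous cycle.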

Little by little, piece by piece, we continue to build up these elements until, in the final few weeks of the course, we build a processor from the ground up. Although this processor isn't nearly as advanced as the specialized, highly-optimized chip that is inside whatever device you're reading this on now, the processor we build in class is fundamentally of the same kind.

But what's most magical about this process is that the entire process is comprehensible. There are no magic leaps or black boxes in the pipeline. While we do abstract away some of the semiconductor physics that governs how the transistors work, everything else along the way is built one step at a time, piece by piece. It's like building a LEGO set.

Transparency aids understanding

The process of building something from scratch like this is eye-opening. Even if the students don't build something with the same level of complexity as the latest processor running on your computer, they understand the process needed to get there and how this impacts what a processor can and cannot do.

For instance, building a processor in this way disabuses students of the idea that a single processor truly multitasks. We often casually think that our computers are doing multiple things at once. It sure feels that way. But at the foundational level, the only way for multiple things to happen in parallel is for there to be multiple separate processing cores. In a simple design like the one we build, one processor means one instruction at any given moment; the appearance of multitasking comes from switching between tasks very quickly.
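One way to picture how a single core can seem to do several things at once is round-robin time slicing. The sketch below is a simplified illustration, not how any particular operating system schedules work: `task` and `run_single_core` are hypothetical names, and each `next()` call stands in for one small slice of processor time.

```python
# Illustrative only: one "core" interleaving several tasks, one step at a time.
from collections import deque


def task(name: str, steps: int):
    """A task that needs `steps` slices of processor time to finish."""
    for i in range(steps):
        yield f"{name}:{i}"


def run_single_core(tasks):
    """Run tasks round-robin; only one step ever executes at a time."""
    queue = deque(tasks)
    trace = []
    while queue:
        current = queue.popleft()
        try:
            trace.append(next(current))  # the core does exactly one thing here
            queue.append(current)        # then the task goes to the back of the line
        except StopIteration:
            pass                         # task finished; drop it from the queue
    return trace


if __name__ == "__main__":
    print(run_single_core([task("keyboard", 2), task("music", 3)]))
    # ['keyboard:0', 'music:0', 'keyboard:1', 'music:1', 'music:2']
```

The trace is strictly sequential. Switch fast enough, though, and it feels like the keyboard and the music are being handled simultaneously.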

When you bust the black boxes and drill down to this level of understanding, it helps you understand not only what a processor does, but what it is good for. What seems like an emergent property that might otherwise amaze you (e.g., the ability of my computer to handle the input from the keyboard that is putting this text on the screen while running a complicated data processing script in the background and playing music) goes from something that seems magical and unknowable to something that can be understood and analyzed. Understanding how a processor works shouldn't make us any less amazed at what it can do, but this understanding gives us a deeper and wiser perspective on how to use it well.

Busting the black boxes of generative AI

We must apply this same thought process to generative AI if we are to use it well. Over the past several years, much hay has been made over what might be called the "emergent" properties of generative AI. To be clear, the things that these algorithms can do are truly astonishing. But they are astonishing in the same way that a computer processor core is astonishing. In other words, it is marvelous, but not magical.

If you were to look under the hood of a large language model, what you would find is not all so different from the processors students build in my class. At its core, generative AI is built on top of deep learning. Deep learning uses neural networks that perform computations using lots and lots of matrix multiplications.
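To show how ordinary the underlying arithmetic is, here is a minimal sketch of a single neural network layer in plain Python. It is a toy under my own assumptions: `matmul`, `relu`, and `dense_layer` are illustrative names, the weights are made up, and real models use optimized libraries and vastly larger matrices.

```python
# Illustrative only: one neural network layer as a matrix multiply plus a threshold.
def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def relu(matrix):
    """Replace every negative entry with zero."""
    return [[max(0.0, value) for value in row] for row in matrix]


def dense_layer(inputs, weights):
    """One layer: multiply the inputs by the weights, then apply the threshold."""
    return relu(matmul(inputs, weights))


if __name__ == "__main__":
    inputs = [[1.0, -2.0]]                # one example with two features
    weights = [[1.0, 0.0], [0.0, 1.0]]    # made-up weights (an identity matrix)
    print(dense_layer(inputs, weights))   # [[1.0, 0.0]]
```

Every step is multiplication, addition, and comparison, the same operations a processor built from logic gates performs. A large model stacks many such layers and does this an enormous number of times.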

But if you were to keep breaking the black boxes, at the end of the day you would find something that is fundamentally identical to what is inside your computer. Yes, the pieces are arranged in a different way to make the parallel operations that are the bread and butter of machine learning more efficient. This is why Graphics Processing Units (GPUs), which are designed to efficiently perform the same operation on lots of data in parallel, are much more valuable than Central Processing Units (CPUs) for AI applications. This is marvelous, but not magical.

Even at what we might call the emergent layer, sitting high above the low-level matrix multiplication that makes it tick, we must also resist the urge to attribute magic to what is happening.

The common wisdom is that the best way to work with an LLM is to treat it as if it were another person. This may work, but it's fundamentally deformational. We ought to instead form mental models for our AI tools that help us align our use with what they actually are, instead of what they might seem to be.

An LLM may be able to generate an output that looks similar to something a human would produce, but the process by which it produced it is nothing like the process that a knowledgeable human would use, whatever the latest and greatest "reasoning" model would suggest. At the end of the day, as Brad says, these models are "flexible interpolative functions from prompts to continuations."

The way it works matters

In case you haven't had your coffee yet today, it's worth unpacking that a bit. Although it's at a different layer of abstraction, the point Brad is making is essentially the same point I am trying to make about processors: what is happening under the hood matters.

As we think about LLMs and how best to use them, understanding what these things are doing matters. What they do is take in an input (a prompt) and then compute the next most probable words to follow it. If you ask a question, the next most probable words will be something that has the form of an answer to that question.
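A toy version of "compute the next most probable words" fits in a few lines. To be clear about the assumptions: this sketch counts which word follows which in a tiny made-up corpus (a bigram model), whereas real LLMs use neural networks over tokens and far more context. The names `train_bigrams` and `continue_prompt` are hypothetical.

```python
# Illustrative only: a bigram "language model" that continues a prompt.
from collections import Counter, defaultdict


def train_bigrams(text: str):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def continue_prompt(counts, prompt: str, n_words: int = 3) -> str:
    """Extend the prompt by repeatedly appending the most frequent next word."""
    words = prompt.lower().split()
    for _ in range(n_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # this word never appeared mid-corpus; nothing to predict
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


if __name__ == "__main__":
    corpus = "the cat sat on the mat the cat sat on the sofa"  # made-up training text
    model = train_bigrams(corpus)
    print(continue_prompt(model, "where is the"))  # where is the cat sat on
```

The prompt has the form of a question, and the output has the form of a continuation. Nothing in the procedure looked anything up or checked whether the result is true; it only followed the statistics of the training text.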

But what's most important to recognize is that this output of the model is not an answer in any conventional sense of the word. LLMs are not solving a search problem where they are matching questions and answers. LLMs are built on a fundamentally different structure than an algorithm like PageRank, which illustrates the core idea behind search engines. PageRank helps to rank existing webpages based on a measure of relevance. LLMs are computing the words that are most likely to come next.

If we want to use generative AI wisely, we need to understand what it is doing. Form and function are connected, and if we are to understand the areas where AI can be applied productively, we need an appropriate mental model of how it works. Perhaps even more importantly, this understanding will highlight areas where AI should not be used because the way it works cuts against the grain of the purpose we are pursuing.

At the end of the day, if the statistical correlation between your prompt and a particular part of training data could yield some useful information, then an LLM can open up a whole new world of possibility. There are many areas where this can be useful, but understanding how the LLM works helps to explain why it is fundamentally untrustworthy.

The correct mental model matters.


Reading Recommendations

This week I stumbled on an essay from Olin College of Engineering professor Erhardt Graeff, “Civic Virtue among Engineers,” published in the Virtues & Vocations Magazine. It was a great read. In it, Graeff highlights some of the work he has been doing at Olin College to cultivate virtue in his students. He writes:

“I believe the next chapter of engineering education requires making good on the promise of a liberal education and recommitting to a holistic definition of higher education’s public purpose. Engineers may run experiments in a vacuum, but their work does not exist in one. There is no dualistic separation of social and technical concerns as Cech and decades of science and technology studies scholarship note. This demands we prepare engineering and computing students in ways that integrate their personal and professional identities into what educational philosopher Carolin Kreber calls an ‘authentic professional identity.’”

The Book Nook

Technology and the Virtues by Shannon Vallor has been on my reading list for a while now but has moved up to the top after reading the essay I linked above by Erhardt Graeff. We are very much in need of this kind of conversation about how technology is shaping who we are becoming.

The Professor Is In

The end of the semester is upon us. Summer is almost here!

Last Friday I was on a Clinic site visit with this year's team, celebrating the good work that they have done. It is always a joy to see students reaping the fruits of their labors throughout the year.

Leisure Line

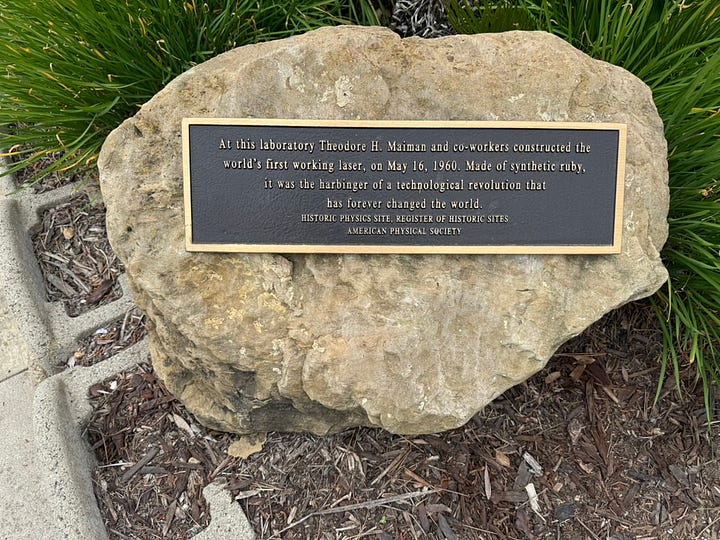

Pretty cool to see not one, but two plaques memorializing the creation of the laser on our Clinic site visit trip.

Still Life

In the category of “life never stops being an adventure,” we discovered a group of bees decided to look for a home with us this last week. We appreciate the pollinators, but not quite so close.

I read again this morning in Ursula Franklin Speaks, her emphasis on constructing understanding from study of elements. I can see her teachings in this post. I’m an English teacher, but I see the transfer pedagogically; the value of this slow, hands-on, collaborative, bottom-up philosophy in helping students to feel both free and humble is immeasurable.

This was useful - thanks! I appreciate the thoughts and the analogy to processors. I think this approach will be useful in helping students understand what LLMs are and are not.