They're Called Teachers

Students don't need a sophisticated word salad mirror, they need a person

Thank you for being here. As always, these essays are free and publicly available without a paywall. If my writing has been valuable to you, please consider sharing it with a friend or supporting me as a patron with a paid subscription. Your support helps keep my coffee cup full.

There's lots of talk about how AI has the potential to revolutionize education. But most of the conversation focuses on how to use AI rather than talking about the prerequisites needed for those AI tools to be beneficial.

When I look at an AI-powered educational tool like Khanmigo, I agree with the core assumption: to learn, students need to be engaged. But I’m not convinced that AI, at least in many of the ways we are seeing it applied, can actually solve this problem. Authentic engagement of humans requires other humans. Perhaps there is a way for that engagement to be mediated through a technology like AI, but ultimately, the most effective way to engage one human is through another.

We already know this. Access to information is not the problem. We live in a world where almost all of human knowledge is available at our fingertips through the Internet. In the not-so-distant past, massive open online courses (MOOCs) were the way of the future, curating the world’s information through the lens of the most talented teachers and communicators. Now they're mostly gathering dust on our digital bookshelves. The content is there, but we lack the motivation to engage with it.

The real problem is not one that can be easily solved by technology. Technology may be part of the solution, but it is not the solution. The problem is not, by and large, a problem on the supply side. The real problem—one that is much harder to solve—is a human problem. It’s the problem of cultivating intrinsic motivation so learners want to learn and giving them the support they need to engage in the productive struggle and failure that is a natural part of the learning process.

Focus on the engine, not the transmission

In this respect, we're spending all our time and effort building a slam-dunk transmission without spending time considering the engine that's powering it. The transmission is a vitally important part of a car, enabling an effective transfer of energy from the engine to the wheels. But you can squeeze every last ounce of performance out of the transmission and it’s only as good as the engine that's powering it.

If we map this analogy onto learning, the engine is the student's input: primarily time, energy, and attention. Knowledge and the tools to learn it, as packaged in courses, lectures, textbooks, homework, projects, labs, and practicums, are the transmission, the drivetrain that helps move the student along their educational journey.

If we're not careful, and I don't think we're being careful, we'll end up using AI to solve the wrong problem. We need to put our design thinking caps on and ask, "To what problem is this AI tool the solution?" If we do this, we'll see that most of those tools, whether they are educational applications of general-purpose generative AI tools like ChatGPT or Claude, or more specialized custom chatbots, focus their energy on improving the transmission. They take an existing input from a student and enable them to create something more impressive with the same input.

The real opportunity for AI in education is not to make the transmission more efficient but to design a system that will stimulate the engine to become more powerful.

As we do this, we'll need to be mindful that education is a human endeavor. There are certainly students who are innately curious and driven to explore the world and learn. All they need is access to the internet or a textbook and they'll be intrinsically motivated to learn the material. But if we're honest with ourselves, this is not a large fraction of our student population. These students are already served well by the existing resources. Just point them in the right direction and they'll go for it.

What we need are systems and structures that can help to create intrinsically motivated learners. And this is where I fear that AI tools, on their own, will fail. This is not to say that they can't be part of the solution, but designing a system that can write personalized mad-libbed word problems using players from your favorite sports to teach you math won't foster the type of authentic engagement that moves the needle.

What we need is not more personalized chatbots, but more persons. We need more teachers who are able to connect with individual students. Teachers who can sit down next to them and sense that they are tired and that they don't need a math problem, but a nap. Teachers who can recognize when they are dealing with family challenges or are otherwise burdened, and ask how they can help support them.

In other words, students need humans walking beside them.

No matter how good these AI tools become, they will never become human. Even if they become so finely tuned that it is impossible to tell the difference between their responses and those of another human, they still won't be human. And when it comes to motivating other humans, this makes all the difference.

True education is incarnational. We can teach others because we were once where they are. We understand not only what they don't yet know, but the path to get there. Not only that, we understand what it is to struggle and to fail along the way.

I remain unconvinced that a chatbot can ever be truly motivational unless, of course, we are willing to delude ourselves. Perhaps these tools can help us to understand ourselves more clearly. That I am willing to grant. But to the degree that they motivate us, it is because we are motivating ourselves. It's fundamentally no different than looking ourselves in the mirror and giving ourselves a pep talk.

Students don't need a sophisticated word salad mirror, they need a person. They need someone to help them to see the path forward. To point them in the direction to go. And to walk beside them on the journey there.

They don't need a robot cheerleader, they need a human helper.

We have a name for those. They're called teachers.


Reading Recommendations

This interview with Shannon Vallor on Alan Alda’s podcast Clear and Vivid is fabulous. Dr. Vallor is the Director of the Centre for Technomoral Futures in the Edinburgh Futures Institute and holds the Baillie Gifford Chair in the Ethics of Data and Artificial Intelligence in the University of Edinburgh’s Department of Philosophy. In the conversation, she talks about why we should be asking critical questions about AI and how to dispel the illusions.

This essay over at Every raises some interesting questions about using AI to stoke our curiosity to explore new things. She writes about how the new AI-powered platforms that enable you to quickly prototype an app created a gateway for her to explore coding.

"The rise of vibe coding tools has dramatically widened a messy, in-between space where you have the ability to build just enough, to experiment and tinker without having to commit to years of learning."

The Book Nook

As we slowly get all our books cleaned, unpacked, and back on the shelves, it’s like rediscovering old friends. It’s particularly fun when the kids get excited to see a book that they forgot they loved. One of the rediscovered favorites this week was Thank You, Omu! It's one of their favorites, and mine.

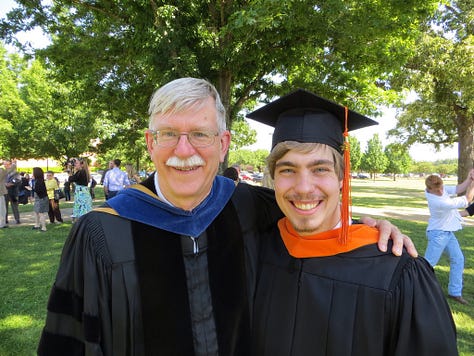

The Professor Is In

Last Wednesday I had the chance to visit Biola University for the day. I enjoyed getting to speak to their student body in chapel and interact with their faculty, staff, and students.

Leisure Line

It feels good to be back in the saddle. After the disruption of the past few months, the pizza oven has been living in a corner in the garage. No more. This weekend I cleaned it off and got it back up and running. Happy to say that despite the lack of recent practice, I’ve still got the touch.

Still Life

When we were in Old Town Pasadena on Monday morning, we checked out Delight Bakery. The name fits. This raspberry croissant was delightful and delicious.

In a podcast with Daisy Christodoulou on using AI to give students feedback on their essays, she found that using automation to grade essays and provide feedback saps students of motivation -- they want to know that someone they esteem (their prof) is reading their paper. If a computer is doing it, then why bother? Similarly, years ago while researching the importance of the doctor-patient relationship, I read about a conference where participants were asked what they want from their doctor. The vast majority of respondents indicated they want "to be heard." I think that's what we all want from our various relationships and personas: to be heard. By a person, not a computer.

We've recently published a Position Paper at Oxford University Press on this theme - the Human Connection: Motivation and Social Learning. In the paper, we recognise the ways that technology has helped many learners - including enhancing their motivation to learn. But we also need to remember (as you say) that human interaction has the biggest impact on our motivation to learn. In my area of language learning, the motivation to learn a skill for human interaction is clearly even more affected by this. Here's a link to the paper (sorry, it'll ask for your email address first): OUP The Human Connection: motivation and social learning