Stop, start, continue: Alternative grading in an upper-level engineering elective

A new alternative grader reflects on his first semester

Today’s guest post is by Josh, a professor of engineering at Harvey Mudd College in Claremont, CA, about 30 miles east of Los Angeles. In addition to teaching engineering and running a research group in biophotonics, Josh publishes a weekly Substack newsletter, The Absent-Minded Professor, about technology, education, and human flourishing, all through the lens of a prototyping mindset. He welcomes you to reach out by email at jbrake@hmc.edu.

There’s something about August that gets me into renovation mode. As the summer begins to wind down and I start to see the fall semester looming, I feel the urge to tear my courses down to the studs and rebuild. Most falls that means making changes in ENGR 155: Microprocessor-based Systems, an upper-division engineering technical elective that I’ve taught each fall since starting my position in 2019.

ENGR 155, known affectionately on campus as MicroPs, is the course where students put their digital electronics knowledge into practice through a series of labs and a final project completed in small teams. In past years I’ve mostly focused on course content, changing some of the labs or updating a few lectures. This year I tore out my old assessment system and tried out an alternative grading scheme.

Today I want to share some reflections on how things went and what I plan to change next year to make it work even better. I’ve enjoyed the Stop, Start, Continue framework that David and Robert have used to organize their reflections in the past, so I’ll adopt those headings here.

Stop: Relax the limits on the number of times students can revise their work.

Start: Provide students with a way to better assess their learning in the class with a progress tracker that offers opportunities for reflection on their learning throughout the semester.

Continue: Maintain transparent and clear specifications that each assignment must satisfy and give students flexibility on how they complete their work.

But before we can talk about those lessons, we’ve got to talk about the course itself. What is MicroPs?

What is MicroPs?

To understand where MicroPs fits into our curriculum, it’s helpful to have a little background on the overall structure of the engineering major itself.

Harvey Mudd’s engineering program is focused on producing generalists, building on the college-wide identity as a liberal arts college. We’re serious about our identity as a general program: unlike most other programs, we don’t offer degrees in specific sub-disciplines of engineering.

After completing the college core curriculum courses in their first year, engineering students begin to take major classes that focus on content within multiple engineering disciplines, as well as modeling, analysis, design, and professional practice. Beginning in their third year, students take at least three technical elective courses to develop depth in particular subfields of interest. MicroPs is one of those technical elective courses, and focuses on the integration of technical content in an open-ended design space.

The class is normally taken by 20-40 students each year. The first half of the course focuses on applying the skills they’ve seen in a previous digital electronics class in a series of labs completed each week. Then the second half transitions into an open-ended project completed in teams.

There are only a few requirements for the projects: build something fun or useful that uses the hardware platforms the students learn in the class. Students build all sorts of things, but the most impressive projects tightly integrate sensors and actuators or leverage the ability of digital hardware to perform highly optimized computations. As one example, one team this year built a volumetric display that generates 3D images by quickly rotating a 2D screen about an axis. If you want to learn more about the projects, you can check out the project portfolio websites the students built or a YouTube playlist of their project videos.

But that’s enough about this year’s student projects; let’s talk about my own prototype in MicroPs this fall: implementing an alternative grading scheme.

Alternative Grading in MicroPs

I’m already in a rhythm of prototyping and continuously working to improve MicroPs by making adjustments each year. During the pandemic, I expanded the specific technical content for the hardware platforms we work with in the class and replaced the traditional final report with a website portfolio. This year my attention turned to grading.

Deep into my renovation, somewhere in the second week of August, I read Specifications Grading by Linda Nilson. You should definitely read this book. Just not two weeks before class starts. After you read it, it’s unlikely you’ll be able to leave your grading system alone, and you’ll have your work cut out for you.

Specs grading resonated with me because it helped me align my assessment practices more closely with the course learning outcomes and communicate those outcomes more effectively. For example, when designing an engineering system, it’s critical that the system reaches a certain minimum threshold of performance. The yes/no judgment intrinsic to specs grading emphasizes this all-or-nothing property and communicates it to students. Specs grading also provides a structure for spelling out clearly and specifically what excellent work looks like, and it shows students exactly how to revise their work if it doesn’t meet the specs on the first attempt.

I was already largely convinced of the value of alternative grading over traditional grading before reading Specifications Grading. My main takeaways were less about the rationale and more about specific templates for implementing a system that would work for my class. The two most important lessons were how to implement credit/no-credit grading for individual assignments and a strategy for translating performance on individual assignments into a letter grade. The book’s many examples gave me a clear vision of how to do both.

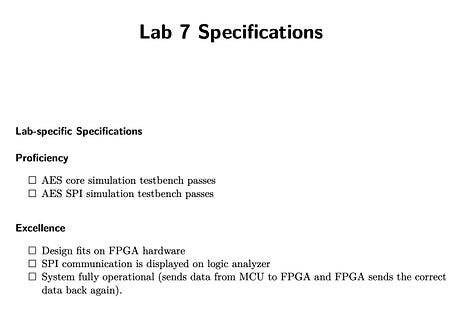

Here’s how the grading system I built works. Students earn their grades by submitting enough assignments in both the labs and projects bundles that meet a given specification level. At the base level, students must complete a number of assignments that meet the proficiency (P) specs. To earn a higher grade, they must also bring a subset of those assignments up to the higher excellence (E) specs.

For example, to earn a C in the labs bundle, students must complete at least 6 labs to the proficiency specs, and at least 2 of those 6 must also meet the excellence specs. They must meet the project requirements as well: for a C in the projects bundle, they need to complete 4 of the 5 project deliverables to the proficiency specs, with at least 2 of those 4 meeting the excellence specs.
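To make the bundle logic concrete, here is a minimal sketch of the C-level check, assuming each assignment is recorded as "E", "P", or "" (not yet meeting specs). The names `BundleRequirement`, `meets_bundle`, and `earns_grade` are hypothetical, only the C thresholds come from the text above, and the total number of labs in the example record is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BundleRequirement:
    min_proficient: int  # assignments meeting at least the P specs
    min_excellent: int   # of those, assignments that also meet the E specs


# Only the C-level thresholds are given in the text; the B and A rows would come
# from the course's own grade table, which is not reproduced here.
C_REQUIREMENTS = {
    "labs": BundleRequirement(min_proficient=6, min_excellent=2),
    "projects": BundleRequirement(min_proficient=4, min_excellent=2),
}


def meets_bundle(levels: list[str], req: BundleRequirement) -> bool:
    """levels has one entry per assignment: "E", "P", or "" (specs not yet met)."""
    excellent = levels.count("E")
    # An E-level assignment also satisfies the P specs, so it counts toward both.
    proficient = excellent + levels.count("P")
    return proficient >= req.min_proficient and excellent >= req.min_excellent


def earns_grade(labs: list[str], projects: list[str],
                reqs: dict[str, BundleRequirement]) -> bool:
    # A grade is earned only if both bundles clear their thresholds.
    return (meets_bundle(labs, reqs["labs"])
            and meets_bundle(projects, reqs["projects"]))


# Hypothetical student record: 8 lab slots (the real lab count is an assumption).
labs = ["E", "E", "P", "P", "P", "P", "", ""]
projects = ["E", "P", "E", "P", ""]
print(earns_grade(labs, projects, C_REQUIREMENTS))  # True
```

The key design choice is that excellence is nested inside proficiency: an E result counts toward the proficiency total, so a student never has to complete extra assignments just to "fill" the P count.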

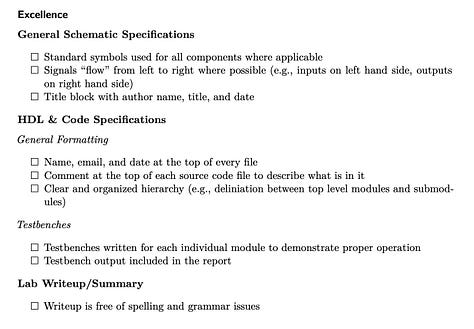

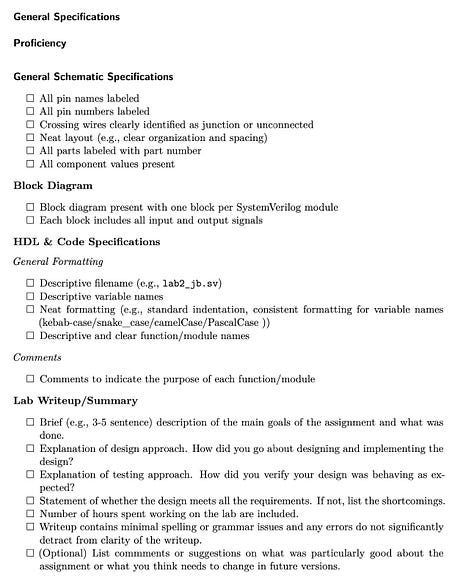

The specs for an individual assignment look something like this. They contain both technical aspects like passing certain verification tests and more general requirements for the documentation and supporting information like well-commented code and clear electrical schematic drawings.

The specs for each assignment are a long list of statements that describe what a successful assignment looks like. They’re designed to be aligned with learning goals (e.g., good documentation) and to explicitly communicate what achieving those learning goals looks like in practice. They’re naturally aligned with another pillar of my pedagogical foundation: transparent teaching.
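A spec list like this can be read as a set of yes/no checks, with no partial credit. The sketch below assumes each spec is tagged as P or E and that reaching E requires every P spec as well; the `Spec` class, `assignment_level` function, and the assignment of example items to levels are all hypothetical, loosely based on the kinds of specs mentioned above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Spec:
    description: str
    level: str  # "P" (proficiency) or "E" (excellence)


def assignment_level(specs: list[Spec], met: set[str]) -> str:
    """Return "E", "P", or "" for a submission, given the descriptions of specs it met.

    Every spec is a yes/no check with no partial credit: a level is reached only
    when every spec at that level (and, for E, every P spec too) is satisfied.
    """
    p_ok = all(s.description in met for s in specs if s.level == "P")
    e_specs = [s for s in specs if s.level == "E"]
    if not p_ok:
        return ""
    if e_specs and all(s.description in met for s in e_specs):
        return "E"
    return "P"


# Hypothetical checklist; which item sits at which level is an assumption.
LAB_SPECS = [
    Spec("Design passes the verification tests", "P"),
    Spec("Code is well commented", "P"),
    Spec("Electrical schematic is clear and complete", "E"),
]
```

For instance, a submission that passes the tests and is well commented but lacks a clear schematic would come back as "P", which tells the student exactly which statement to address in a revision.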

Stop, start, continue

Hopefully you now have a flavor for both the course itself and what the specifications for an individual assignment look like. If you’re interested, more details can be found on the course website. Earlier this fall I also wrote about why alternative grading resonates with me and how that is rooted in my days being homeschooled as a kid.

In this last section, I want to share some of my reflections now that the semester has come to a close.

Stop: Relax the limits on the number of times students can revise their work.

At the start of the semester, I didn’t have a strong sense of how students would react when given the freedom to revise and resubmit their work. Conscious that allowing an unlimited number of revisions could create a significant grading burden for me and also undermine students’ incentive to stay on schedule, I landed on a token system that gave each student two types of tokens: one extension token and two retry tokens.

The extension token let students take a one-week extension on any lab, no questions asked. I hoped this would give them a release valve for the busy weeks in the semester when everything piles up at once, or for non-school-related travel.

The retry tokens let students resubmit revised versions of their work. With a retry token, a student could keep working on a lab that had met the minimum proficiency specs but hadn’t yet reached the excellence specs.

The short version of my conclusions is that my fears were overblown. A subset of students didn’t use any tokens, and I don’t think those who did would have needed more than one, or at most two, reassessments. Next year I’m considering removing the limit on retry tokens and letting students reassess their work to raise it from proficiency to excellence whenever they like. I’ll still keep one extension token to help students stay on track while giving them some flexibility and agency over their deadlines.
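The token rules are simple enough to sketch as a small ledger, which also makes the proposed change for next year easy to compare. This is a hypothetical model, not the course’s actual tooling: `TokenLedger` and its methods are illustrative names, and `retries_left=None` is an assumed way to encode "unlimited retries".

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TokenLedger:
    extensions_left: int = 1          # one no-questions-asked, one-week lab extension
    retries_left: Optional[int] = 2   # None means unlimited retries (proposed change)

    def use_extension(self) -> bool:
        """Grant a one-week extension on a lab if an extension token remains."""
        if self.extensions_left <= 0:
            return False
        self.extensions_left -= 1
        return True

    def use_retry(self, current_level: str) -> bool:
        """Allow a resubmission only for work at P that has not yet reached E."""
        if current_level != "P":
            return False  # no token is consumed for ineligible work
        if self.retries_left is None:
            return True
        if self.retries_left <= 0:
            return False
        self.retries_left -= 1
        return True


this_fall = TokenLedger()                       # 1 extension, 2 retries
next_year = TokenLedger(retries_left=None)      # 1 extension, unlimited retries
```

Keeping the extension count separate from the retry count reflects the reasoning above: the retry limit can be relaxed while the single extension token still nudges students to keep pace.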

All this being said, I don’t think that I made a poor choice in limiting these tokens for my first iteration of this new grading system. As David wrote last week, it is wise to limit the number of revision opportunities available to students, especially when you are running the first prototype of an alternative grading system which relies upon it. However, this advice, while sound as a starting point, may not be the best choice in your individual circumstances. The important thing is to consider how your plan for revisions will best support your students within the limited time and resources you have at your disposal.

Start: Providing students with better ways to track their progress

I’ve been enjoying Emily’s recent series about the progress tracker she has developed for her students. In essence, it’s a document with several tables that help students see where they are in the class and understand their progress toward the course learning goals. This addresses a common source of anxiety by giving students clarity about where they stand. A progress tracker like Emily’s helps students keep a 50,000-foot view of their progress in the class.

In addition to tracking specific assignments, I’d also like to use the progress tracker as a place for students to document their own reflections on their learning. Self-reflection is a critical part of learning, and I want to give students more opportunities for it throughout the semester. Since MicroPs is an elective, students likely have specific reasons for taking the course, and I want them to identify these goals at the beginning of the semester so that I can support them in their individual learning journeys.
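One way to picture such a tracker is as a record that holds a student’s goals, assignment results, and reflections, and reports how far each bundle is from a target grade. The sketch below is hypothetical: `ProgressTracker` and its fields are illustrative names, results are assumed to be recorded as "E", "P", or "", and only the C-level targets come from the grading description earlier in the post.

```python
from dataclasses import dataclass, field


@dataclass
class ProgressTracker:
    goals: list[str]  # personal reasons for taking the course, set at the start
    labs: list[str] = field(default_factory=list)      # "E", "P", or "" per lab
    projects: list[str] = field(default_factory=list)  # same, per deliverable
    reflections: list[str] = field(default_factory=list)

    @staticmethod
    def _gap(levels: list[str], min_p: int, min_e: int) -> tuple[int, int]:
        excellent = levels.count("E")
        proficient = excellent + levels.count("P")
        # Gaps can overlap: one new E-level result closes one unit of each.
        return max(0, min_p - proficient), max(0, min_e - excellent)

    def summary(self, target: dict[str, tuple[int, int]]) -> dict[str, tuple[int, int]]:
        """For each bundle, (more results needed at P or above, more needed at E)."""
        return {
            "labs": self._gap(self.labs, *target["labs"]),
            "projects": self._gap(self.projects, *target["projects"]),
        }


# The C-level thresholds from the post, as (min proficient, min excellent).
C_TARGET = {"labs": (6, 2), "projects": (4, 2)}

tracker = ProgressTracker(goals=["Hypothetical goal: build confidence debugging hardware"])
tracker.labs = ["E", "P", "P", "P"]
tracker.projects = ["P"]
tracker.reflections.append("Hypothetical week 4 reflection entry")
print(tracker.summary(C_TARGET))  # {'labs': (2, 1), 'projects': (3, 2)}
```

Storing reflections alongside the counts mirrors the idea above of using one tracker both for the 50,000-foot view of grades and for ongoing self-reflection.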

Continue: Maintaining transparent and clear specifications to help students understand what excellent work looks like

Even though there are things that I want to stop or start, overall I am very pleased with the way that this first prototype went.

Here are three things that went well this semester that I look forward to continuing next year:

Support for transparent teaching practices: alternative grading supports the explicit communication of what good work looks like. This puts me in a position to work with students to help them meet or exceed those standards of excellence.

The writeups and documentation were the best I have seen in the five years I’ve taught the class. This showed me that a significant part of the problem had been on me, for not clearly communicating what good documentation looks like.

The technical work was also executed at a high level. I set the bar, and students rose to the challenge. This was borne out in the final projects: not only did students aim for ambitious projects, they executed them.

Go forth and prototype!

I’ll come right out and say it: you should start looking for a place to experiment with alternative grading in a course you teach next term. I can’t promise it will be easy or go off without a hitch, but I can guarantee that you will learn something important, even if you scrap it all and go back to whatever grading system you use now. And I bet you won’t.