10 Questions That Educators Should Be Asking About AI

If I were in charge of casting a vision for addressing AI at Harvey Mudd, these are the questions I’d urge us to ask

Bad advice about integrating AI into your teaching abounds. But what I’m really getting tired of seeing is folks treating an LLM like ChatGPT as an intelligent agent without any consideration of the underlying technical architecture that makes it good or bad at a particular task. It’s critical to have at least a basic understanding of what a tool is before you decide how and why you are going to use it. Without that basic literacy, you’re bound to make unfortunate and avoidable mistakes when deciding how to approach it or integrate it into your pedagogy.

There are a few issues here, but what I keep seeing come up over and over again is that people don’t think to look under the hood. Sure, the text that an LLM can generate in response to a prompt is pretty amazing, but you’ve got to look past the initial spit and polish and ask what’s actually going on in order to move forward wisely. If you’re looking for a good primer, I’d recommend this visual explainer from the Financial Times.

At its root, an LLM is a computer program that is really good at guessing which word or phrase should come next in a sequence, based on the prompt and the words it has already generated, so that the result sounds good. Once you understand that, it should come as no surprise that it is intrinsically really bad at math. If it happens to get a math question right, it’s because its guess happened to land on the right answer, not because it actually did the calculation. Without plugging into an external service like WolframAlpha, the underlying architecture of an LLM just is not a reliable way to do mathematics.
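To make the “guess the next word” idea concrete, here is a toy sketch in Python. It is a bigram model, which only counts which word followed which in a tiny made-up corpus. Real LLMs use large neural networks over subword tokens and much longer context, so treat this as an illustration of the principle, not as how ChatGPT actually works. The function names and the corpus are hypothetical, invented for this example.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, how often each other word follows it."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# A tiny, made-up corpus in which the wrong sum appears more often
# than the right one.
corpus = (
    "two plus two is four . "
    "two plus two is five . "
    "two plus two is five . "
)
model = train_bigrams(corpus)
print(predict_next(model, "is"))  # five
```

Because the made-up corpus says “two plus two is five” more often than “four,” the model completes “is” with “five.” It is reporting what was frequent in the text it saw, not doing arithmetic.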

Computers lie through their teeth

This whole thing reminds me of the first time that I learned that using a computer to add 0.01 together 100 times does not give you 1.

At first, this seems ludicrous. How on earth could a computer not be able to compute an answer that any middle schooler could probably compute without issue? Don’t believe me? Check it out.
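Here’s a minimal way to check it yourself in Python, whose `float` type is a 64-bit binary floating point number on typical platforms. The exact comparison uses Python’s standard `fractions` module, and the variable names are just for illustration.

```python
from fractions import Fraction

# Add one cent, one hundred times, using ordinary binary floats.
total = 0.0
for _ in range(100):
    total += 0.01

print(total)          # close to 1, but not exactly 1
print(total == 1.0)   # False

# The same sum with exact rational arithmetic really is 1.
exact = sum(Fraction(1, 100) for _ in range(100))
print(exact == 1)     # True
```

Exact types like `Fraction` sidestep the problem by not using binary floating point at all, at the cost of speed.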

The issue is the fundamental architecture under the hood. Computers represent numbers in binary (base 2) using floating point. This means that if a number cannot be written exactly as a finite sum of powers of 2 (0.75 = 1/2 + 1/4 can, but 0.01 cannot), its binary expansion goes on forever and has to be rounded to fit in a fixed number of bits, so the stored value is slightly off.¹ This is a fundamental and yet counterintuitive fact about how computers work.
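To see the rounding directly, Python’s standard `decimal` and `fractions` modules can reveal the exact value a float stores. This is a small sketch with illustrative variable names: 0.75 (which is 1/2 + 1/4) survives the trip into binary unchanged, while 0.01 does not.

```python
from decimal import Decimal
from fractions import Fraction

# Decimal(float) converts the stored binary value exactly, digit for digit.
print(Decimal(0.75))   # 0.75
print(Decimal(0.01))   # a long expansion that is close to, but not, 0.01

# Compare each stored value against the exact fraction we meant.
print(Fraction(0.75) == Fraction(3, 4))    # True
print(Fraction(0.01) == Fraction(1, 100))  # False

# Every finite float is a fraction whose denominator is a power of 2;
# 1/100 has a factor of 5 in its denominator, so it can't be one of them.
denominator = Fraction(0.01).denominator
print((denominator & (denominator - 1)) == 0)  # True: a power of 2
```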

Once you understand how a computer works, it’s plain as day that this error is present. You can make it less significant by using more bits in your representation, but the problem will never go away completely because it is inherent in the design of the system itself. I will always remember Dr. Larry Anderson in my Numerical Methods class in undergrad: “Computers lie through their teeth.” Don’t understand how a computer works or represents numbers? You’ll trust it when you shouldn’t. Eventually, it will come back to bite you.
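The “more bits” point can be sketched too. Python floats are 64-bit, so this example simulates 32-bit floats by rounding every intermediate result through the standard `struct` module. The helper names are hypothetical, made up for this sketch.

```python
import struct


def to_float32(x: float) -> float:
    """Round a 64-bit Python float to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", x))[0]


def keep_float64(x: float) -> float:
    """Leave a value at Python's native 64-bit precision."""
    return x


def sum_cents(n: int, round_fn) -> float:
    """Add 0.01 to itself n times, rounding each step with round_fn."""
    step = round_fn(0.01)
    total = 0.0
    for _ in range(n):
        total = round_fn(total + step)
    return total


err32 = abs(sum_cents(100, to_float32) - 1.0)
err64 = abs(sum_cents(100, keep_float64) - 1.0)
print(f"32-bit error: {err32:.2e}")
print(f"64-bit error: {err64:.2e}")
```

Going from 32 to 64 bits shrinks the error by many orders of magnitude, but neither version lands exactly on 1.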

Hopefully, this simple example helps to illustrate why we need to be careful about trusting LLMs and the outputs they generate. Of course, the problem is that we know a lot less about how LLMs work under the hood than we do about how floating point math happens. This is still an open area of research, and so we need to move forward with caution, curiosity, and a questioning attitude.

As I’ve watched the latest round of bad takes about AI in education make their way through my inbox, I decided to take a crack at a list of suggestions that I hope will serve us better. This is by no means an exhaustive list, and I would be glad to hear your feedback or pushback on any of it, but if I were in charge of making a plan to address AI at my institution, here are ten questions I would be telling my colleagues to ask.

1. How do AI tools fit within a broader framework of the history of technology?

Those of you who have been here before know that the best thing that I’ve read on AI this year was written over thirty years ago by Canadian physicist Ursula Franklin. In her 1989 CBC Massey Lectures The Real World of Technology, Dr. Franklin presents a sweeping and incisive critique of the influence that technology has had on our world and elucidates the ways that designers of technology so often misjudge the impact of their work.

The reason for this, she argues, is that we all too often neglect the deeply interconnected nature of our technology with the broader culture and societal structures surrounding it.

Manufacturers and promoters always stress the liberating attributes of a new technology, regardless of the specific technology in question. There are attempts to allay fear, to be user-friendly, and to let the users derive pride from their new skills.

To make her point she uses the development of the sewing machine as an example. It started innocently enough:

The introduction of the sewing machine would result in more sewing — and easier sewing — by those who had always sewn. They would do the work they had always done in an unchanged setting.

Unfortunately, technology is part of a larger system of culture and society. As such, we need to make sure that our analysis of its impact is focused not just narrowly on the productivity of a single user, but on the broader influence that the technology has when it reaches a critical mass of adoption. At that point, the surrounding social, cultural, political, and economic systems begin to strongly shape the ultimate broader influence of a particular piece of technology.

Reality turned out to be quite different. With the help of the new machines, sewing came to be done in a factory setting, in sweatshops that exploited the labour of women and particularly the labour of women immigrants. Sewing machines became, in fact, synonymous not with liberation but with exploitation.

We’re in the early days of AI adoption in education. In many ways, we’re in a situation not unlike the one in which the sewing machine was being used by women at home to sew more easily and more quickly. But this phase is only one point on the arc of technology development. Even now we are seeing AI enter a phase of broader adoption. We’d better keep our eye on the history and patterns of previous technological innovation if we want to avoid some of the less desirable impacts.

A final haunting paragraph from Franklin:

The early phase of technology often occurs in a take-it-or-leave-it atmosphere. Users are involved and have a feeling of control that gives them the impression that they are entirely free to accept or reject a particular technology and its products. But when a technology, together with the supporting infrastructures, becomes institutionalized, users often become captive supporters of both the technology and the infrastructures. (At this point, the technology itself may stagnate, improvements may become cosmetic or marginal, and competition becomes ritualized.) In the case of the automobile, the railways are gone: the choice of taking the car or leaving it at home no longer exists.

2. Are your policies around monitoring AI use supporting or undermining a culture of trust?

Trust is one of the most important features of a successful classroom. This is one of the reasons that I think using tools to scan your students’ work for AI-generated content is wrong on purely philosophical grounds. Even in a world where a tool could perfectly detect if and how a student used AI in their work (and newsflash, that world doesn’t exist today), how does filtering students’ work through that system serve either the teacher or the student? If the system flags AI-generated content, this sets up a painful confrontation between the teacher and the student, made worse by the fact that these tools can’t reliably tell the difference between LLM-generated and human-written text.

I realize that this touches more generally on the ideas of academic integrity. It’s self-evident to me that we need to create structures that foster virtues of honesty, integrity, and fairness in our students. It’s also important that we ensure that students are not gaining unfair advantages over their peers and maintain the meaning of our degree programs as a marker of a certain level of achievement.

But are confrontational tools like plagiarism detectors really the best way for us to achieve this aim? On the whole, do they do more harm than good?

I still remember needing to feed my essays in high school through Turnitin’s online plagiarism detection system to verify that I properly cited the text in my essays that came directly from other sources. Even this is not so great since it undermines the student-teacher relationship of trust, but it’s much better than feeding student data to the current crop of tools that claim to delineate AI-generated from human-written text.

What’s the end game with using these tools? Does the specter of these tools actually help to intrinsically cultivate the character we want or are we just setting up a combative dynamic that erodes trust?

3. How accurate or reliable do AI-detection tools need to be for the advantages to outweigh the disadvantages?

Of course, the elephant in the room is that the previous question is moot. There are no tools that can reliably detect AI-generated content. Not even close.

OpenAI itself couldn’t build a reliable tool to do it and pulled its AI classifier in July of this year, citing its low rate of accuracy. If OpenAI can’t do it, do you really think that Turnitin.com can?

Stop for a moment and consider how economic imperatives might shape the story too. Turnitin’s core business is plagiarism detection. If it can’t at least claim to detect AI-generated text, it’s in big trouble. Straightforward plagiarism seems almost quaint at this point, when ChatGPT can generate pages upon pages of text at the click of a button. If companies like Turnitin want to survive, what choice do they have but to release a tool to flag this content, even if it is of questionable accuracy?

To put the question more concretely: how accurate would an AI-detection tool need to be for you to consider using it? It’s one thing to consider using a tool that could tell you with 100% certainty whether a student has used AI. I still think that could be a bad choice depending on your rationale for it, but at least the technology itself wouldn’t be the issue. But in our current situation, no such tool exists. And so you need to ask yourself how many students you are willing to falsely accuse of using AI for every 100 students you correctly identify.
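To make that trade-off concrete, here’s a back-of-the-envelope calculation. Every number in it is hypothetical, chosen only for illustration and not taken from any real product: a detector that catches 90% of AI-written work and wrongly flags 5% of honest work, applied to 1,000 students.

```python
def false_accusations_per_100_caught(n_students, ai_share,
                                     true_positive_rate, false_positive_rate):
    """For a hypothetical detector, how many honest students get flagged
    for every 100 AI users it correctly flags?"""
    ai_users = n_students * ai_share
    honest_students = n_students - ai_users
    correctly_flagged = ai_users * true_positive_rate
    falsely_flagged = honest_students * false_positive_rate
    return 100 * falsely_flagged / correctly_flagged


# Hypothetical scenario A: 10% of 1,000 students used AI.
scenario_a = false_accusations_per_100_caught(1000, 0.10, 0.90, 0.05)
# Hypothetical scenario B: only 2% of 1,000 students used AI.
scenario_b = false_accusations_per_100_caught(1000, 0.02, 0.90, 0.05)

print(f"A: {scenario_a:.0f} false accusations per 100 correct flags")  # A: 50
print(f"B: {scenario_b:.0f} false accusations per 100 correct flags")  # B: 272
```

With these made-up rates, scenario A flags 45 honest students while catching 90 AI users. In scenario B, where fewer students actually used AI, the same detector accuses 49 honest students and catches only 18, so it accuses more innocent students than guilty ones.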

4. How are you involving students in your conversations around AI?

In my experience, the more I can frame teaching and learning as an activity where I am partnering with students and walking alongside them, the better. This is one reason why I’m so excited about the alternative grading techniques I’ve been exploring recently. A guest post published yesterday had a line that resonated with me on this front:

Growth-based grading means that I am not an evaluator but an encourager.

As we talk about AI and how we are approaching it, we should pay particular attention to which voices are in the room (not to mention which voices are represented in the data these tools are trained on). In the same way that we should seek out feedback on AI from people of diverse perspectives and backgrounds, we should also consider how we are giving students a voice at the table.

In this vein, I appreciate the efforts of the organizers of a virtual conference held earlier this fall that brought together voices from across the spectrum to weigh in. This included not only faculty and students in higher ed but also K-12 educators and companies building these technologies in the ed-tech space.

These types of interactions help to build coalitions and common ground among the various stakeholders. In the end, we may not agree on the details of the specific policies that are implemented, but we are much more likely to arrive at policies that are well considered and that best serve the various stakeholders in the process.

5. What are the reasons motivating your AI policies?

One of my core values as an educator is transparent teaching. I’ve written before about how transparent teaching is a natural antidote to the challenges presented by AI tools like ChatGPT or other technologies that might challenge our classroom culture.

It’s likely that every class will now need to have a specific AI policy to lay out how you plan to engage with AI in your classes.

A number of educators have done a great job of laying out a framework of the available options. In broad strokes, you can ban, embrace, or critically engage with AI. I think the right option will likely fall in the category of critical engagement, but that will look different depending on your context. Here’s a copy of the policy I’m using in my class this fall in case you want to see how I’m approaching it.

If you teach writing, critical engagement might look like generating text with AI and then critiquing it, which sits closer to the ban end of the spectrum. As a computer scientist, you may find more value in leaning toward embrace, seeing how the tool might help you code more quickly by acting, in essence, as a more powerful autocompletion tool. As an engineer, you might use it to make suggestions in the design process and help you think outside the box as you ideate, as Julia Lang and AMP reader Dustin Liu have done in their guide on using ChatGPT for Life Design.

The point is that whatever your decision, it should be thoughtfully considered. AI will be a part of our future so ignoring it isn’t a tenable option. The only option that is really on the table is figuring out how we want to address it.

6. What structures are you cultivating in your context for curious exploration and critique of AI tools?

I think it’s hard to imagine a future that isn’t significantly influenced by AI. In many ways, you could argue that we are already seeing the early returns in the present from some of our first interactions with the algorithmic content curation that is at the heart of our social media feeds and online shopping experiences.

In this frame, I think the question is less whether we will engage with AI in our courses than how. Connecting to the previous question, there are a variety of options to choose from when deciding how to approach AI. First, we need to decide how we are going to engage with it, and then we need to figure out the systems and structures within which to carry out that engagement.

The design thinking tools embedded in a prototyping mindset are useful here. A design process is a good example of the kinds of feedback loops that foster thoughtful and purposeful learning. Although there are many variations, most design processes incorporate steps like the five in the Stanford d.school’s hexagon diagram: empathize, define, ideate, prototype, and test.

This iterative feedback loop is a helpful guide for shepherding our critical engagement with AI tools. First, we must reflect on what we know and empathize with our target audience. In the context of a class, an instructor must put themselves in the shoes of a student, and students should do the same for their instructors. Then, from a shared foundation of empathy, we can move forward to define the problem, generate creative ideas for addressing it, and build concrete prototypes to test those ideas. As we learn from our mistakes along the way, we can repeat this process to uncover both the positive and negative impacts of AI.

7. To what problem is AI the solution?

I love the way that this question flips the script. It is adapted from a similarly titled first-year writing class, “To What Problem is ChatGPT the Solution?”, being taught at Harvard this semester.

The provocative framing helps us to see that we are in the all-too-common situation of holding a hammer and looking for nails. This is in many ways an archetypal problem of technology. So often the tools that we develop are less a hammer created to drive a nail than a hammer looking for a nail to drive. We create the tool and then find applications for it.

The problem with this approach is that the tool itself acts as an implicit lens, which is all too easy to miss. Here Marshall McLuhan’s famous phrase “the medium is the message,” which I first encountered in Neil Postman’s book Amusing Ourselves to Death, resonates.

The author of Stolen Focus writes eloquently about this. Reflecting on McLuhan’s quote, he writes:

What he meant, I think, was that when a new technology comes along, you think of it as like a pipe: somebody pours in information at one end, and you receive it unfiltered at the other. But it’s not like that. Every time a new medium comes along, whether it’s the invention of the printed book, or TV, or Twitter, and you start to use it, it’s like you are putting on a new kind of goggles, with their own special colors and lenses. Each set of goggles you put on makes you see things differently.

How are the goggles of LLMs shaping the way you see the task of writing?

8. How can AI tools be used creatively to support student learning within the classroom?

My original take on approaching ChatGPT was to use it as a ladder and not a crutch. The point that I was trying to make is that we should use AI tools to extend our capabilities but not to create unhealthy dependencies. However, in the months since, an exchange I had with a colleague challenged me to reconsider the metaphor of the crutch from a different perspective.

My original take was focused on the potential problems with crutches: if they’re used improperly, they can cause harmful dependence. But what my colleague pointed out was that the crutch is actually quite a helpful and beneficial technology in almost all situations where it is used. Used properly, it is a godsend when you would otherwise be unable to get around easily while recovering from an injury.

In this light, maybe we should use AI tools like a crutch. While we still need to be mindful of how we use them, I can imagine AI tools providing additional support to students who need to catch up on a topic they haven’t yet grasped at the level a particular course requires. Concerns about the accuracy and quality of the generated content notwithstanding, this lens has me thinking about ways I might leverage AI to support students who are struggling with the content in my courses.

9. How does AI impact your core learning goals?

Y’all are probably sick of me talking about it at this point, but I’m going to once again plug Epstein’s book Range. As I’ve written before, I find that Epstein makes a convincing case for the value of a liberal arts education, a case that is becoming even stronger with the recent advancements in AI.

The core of Epstein’s argument is that we need to focus not on specializing but on generalizing. When we overspecialize, we become fragile and unsteady. It’s sort of like standing on one leg instead of standing firmly on two legs with your knees bent in a ready position, waiting for a ball to arrive on your side of the court.

In a world where AI is disrupting industries, we need to be in the equivalent of the “ready position” in our contexts. The ground is shifting under our feet, but with a broad enough foundation we can deftly react and adapt.

My prediction is that over the next few years, liberal arts colleges will be among the best positioned to respond to the disruptions of AI. Students who are just now entering college need to be especially mindful of staying balanced and building a strong foundation instead of trying to gear up to catch where they expect the wave to be in four years when they graduate. Choosing a major in a knowledge work field right now is a particularly challenging game because things are shifting so quickly.

Even if you don’t think there’s any there there to all this stuff about AI, other people do and are making decisions accordingly. This is sort of like the toilet paper craze in the middle of the pandemic. It wasn’t rational to panic buy toilet paper except for the reason that everyone you knew was panic buying toilet paper.

Take this reflection from a recent Substack post about a CEO’s take on the impact he expects AI to have on his future hiring decisions: “Most of our employees are engineers and we have a few hundred of them… and I think in 18 months we will need 20% of them, and we can start hiring them out of high school rather than four-year colleges. Same for sales and marketing functions.” It’s ironic to me that this statement was made by Chris Caren, the CEO of Turnitin, but take that for what it’s worth.

10. Who is part of your circle of advisors on AI in education?

The only constant is change. As you think about AI, it’s critical to sort through the noise and hype and figure out what is really going on so that you can take a wise approach.

One of the wonders of the internet is that you can build your own set of virtual coaches and mentors just by doing a little web browsing. Here’s a list of some folks that I’ve appreciated in this space. If you have others who have helped you to think through these ideas, please send them along in an email or a comment!

- Ethan: He writes about AI on his Substack and is one of my go-to sources for thoughtful perspectives on AI in education. What I appreciate about Ethan is that he is a prolific prototyper. He is constantly reporting back on experiments and studies that he and others are doing, which keeps his perspective concretely informed by real data.

- John: He writes in a few different places and has shaped my thinking on AI ever since I first came across a piece in his newsletter titled “ChatGPT Can’t Kill Anything Worth Preserving.” In addition to his excellent Substack, he writes a column at Inside Higher Ed that is full of great advice on how to address AI. He’s also got a book in the works, Writing With Robots, which, if it’s anything like The Writer’s Practice, I’m sure to love.

- Jane: She is the Director of the Harvard College Writing Center and writes a Substack newsletter. I already mentioned her provocatively titled first-year writing course, “To What Problem is ChatGPT the Solution?” One piece from Jane that I’ve especially enjoyed in the past few months is one where she analyzes a Sam Altman tweet and asks questions about writing and learning to write in the age of AI.

- Marc: He has been a consistent source of thoughtful analysis of AI and writing on his Substack. Marc helped spearhead an AI Institute for Teachers at the University of Mississippi and has since developed an online course on generative AI in education. Recently, Marc has been digging into how we may need to move beyond the analogy of the calculator as AI tools become increasingly able to process and output content in various forms.

- Anna Mills: I first came across Anna’s work on Twitter, where I noticed she was all over curating and sharing information to help educators think carefully about how AI should impact their teaching. You can learn more about Anna and her work at her website.

- Lance: He embodies a full-on experimental embrace of AI in his writing and thinking process. In his newsletter, he documents how he is using AI. It’s worth keeping tabs on to see how you might experiment with AI in your own context.

- Gary Marcus: Gary writes on his Substack and elsewhere. He tries to wade through the morass of AI hype and help his audience understand what is actually going on. Gary has been studying and writing about AI for a long time and is well worth checking out.

Come connect on Bluesky!

Lately, I’m moving more and more away from Twitter and spending more of my time over on Bluesky. Bluesky, for those of you who haven’t heard of it, is a new social media platform that is similar to Twitter but is built on top of the AT Protocol. It has some pretty neat features that allow you to choose different custom algorithms for generating your news feeds.

Bluesky is still invite-only, but I have a few codes if you’d like to try it out. Feel free to ping me and I’ll send one over!

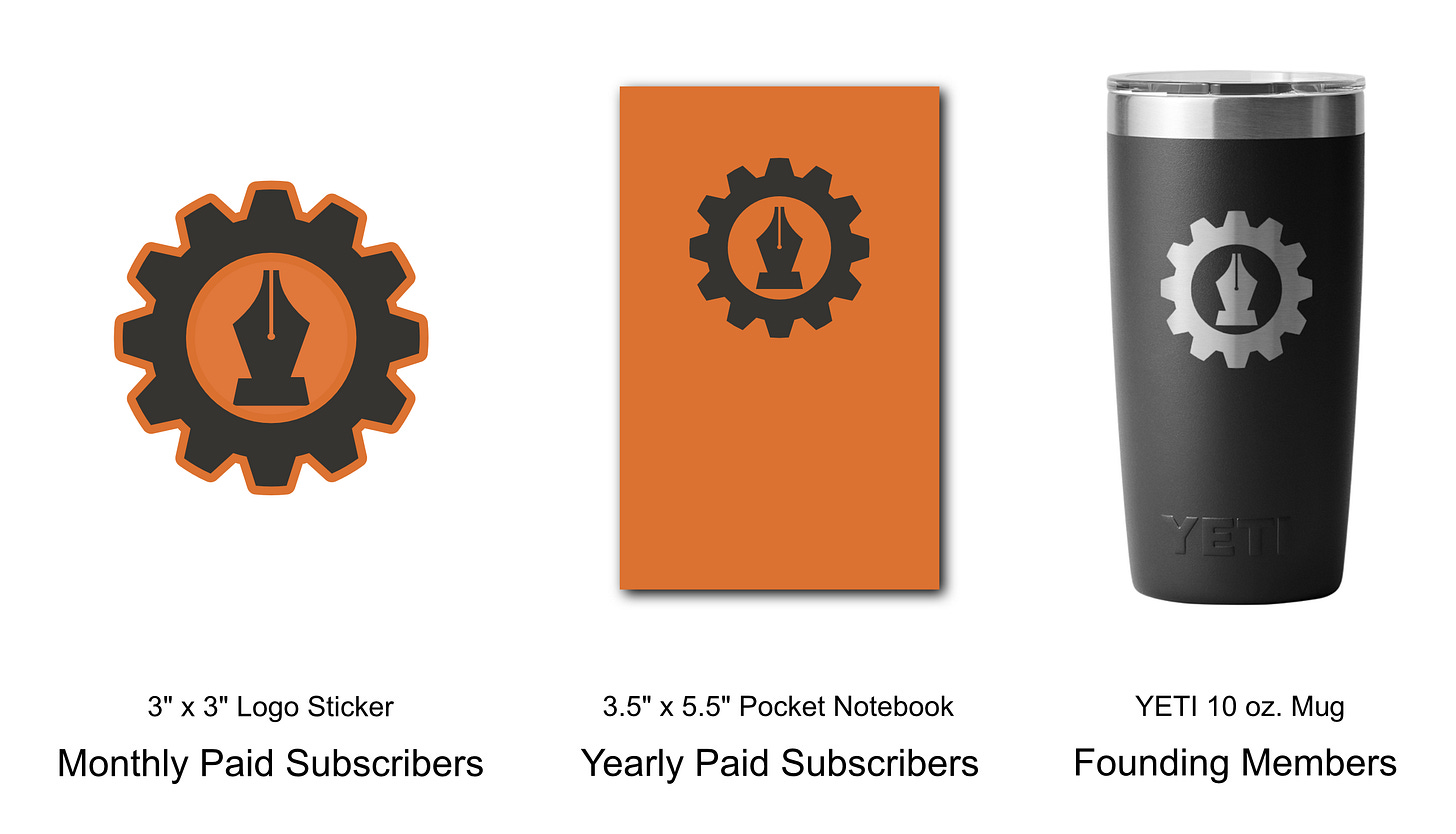

Last thing: Absent-Minded Professor Gear

Over the weekend I spent some time designing some new items to offer as a token of appreciation to patrons of the newsletter. There are three tiers:

Stickers for monthly paid subscribers

Pocket-size notebooks for yearly paid subscribers

A YETI 10-oz vacuum-insulated mug for founding members

If you’re interested in supporting my writing, for the next seven days I’ve set up a discount of 20% off your first year. After you upgrade, you’ll receive a link to send me your mailing address so I can send these out when they arrive! Existing subscribers will hear from me in the coming days with information on how to let me know where to send your gear! Thanks as always for reading and for your support.

The Book Nook

The latest book for our murder mystery book club was Five Little Pigs by Agatha Christie. While some members of our group were not super excited about this one, I really liked it.

A quick spoiler-free plot summary: a painter is murdered, and his wife goes to prison for it. Fast-forward 16 years, and their adult daughter is trying to understand what actually happened and confirm her mother’s innocence. She enlists the help of our mustached friend Hercule Poirot, who reconstructs the past by piecing together the stories of five main characters to paint a picture of what really happened.

I love Christie’s books and liked this one as well. It gives you enough information to put some good hunches together, but not enough to make you feel overly confident that you’ve got it all figured out.

As an aside: we’ve been figuring out how to schedule our discussions better as we’ve been going along. This time we met for our first meeting after we’d all read about 2/3 of the way through which gave us enough information to make some well-informed theories but not enough to give away the ending.

Next up is Iron Lake by William Kent Krueger.

The Professor Is In

This week is fall break at Harvey Mudd so we have no classes on Monday and Tuesday. Glad to have a little space to recharge and spend more time with the family and catch up on some items on the to-do list.

Leisure Line

Another Saturday, another trip to Randy’s Donuts. If they had a loyalty program, at this point I’m sure I would have earned at least a free dozen.

I particularly enjoyed the seasonal pumpkin spice donut with cream cheese icing on top!

Still Life

This shot of Caltech Hall (formerly known as Millikan Memorial Library) at Caltech reminded me just how beautiful Caltech’s campus is.

¹ This is the same thing that happens when you try to represent a number like 1/3 in decimal (base 10). You get 0.333…, with the 3s repeating forever without ever terminating.
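The same effect is easy to reproduce in base 10 with Python’s standard `decimal` module by limiting it to 10 significant digits (an arbitrary choice for illustration):

```python
from decimal import Decimal, localcontext

with localcontext() as ctx:
    ctx.prec = 10                      # keep only 10 significant digits
    third = Decimal(1) / Decimal(3)    # 1/3 is rounded to 10 digits
    back = third * 3                   # multiply the rounded value by 3

print(third)   # 0.3333333333
print(back)    # 0.9999999999, not 1
```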
