Thank you for being here. As always, these essays are free and publicly available without a paywall. If my writing is valuable to you, please share it with a friend or support me with a paid subscription.

While I was airborne from LA to New York a few weeks ago, I watched Andrej Karpathy's recent talk at the YC AI Startup School. Karpathy, one of the founding members of OpenAI and the former director of artificial intelligence and Autopilot Vision at Tesla, knows a thing or two about AI. But more than his technical expertise, I appreciate his ability to zoom out and see the big picture. It’s something we need to do in the context of education, too, especially as we grapple with the near-term impacts of LLMs on our work in educational spaces.

To be sure, generative AI will continue to develop as new techniques are invented and refined. But the core engine that is driving the current wave of innovation is the Large Language Model (LLM). We are certainly beginning to see the limitations of LLMs, but it would be shortsighted and naïve to think that we fully understand LLMs and their potential use cases yet. What we do need are mental models to help us understand what LLMs are. Only then can we chart a course to help us navigate the choppy waters of the next few years, for our own sakes and the sake of our students.

The Evolution of Software

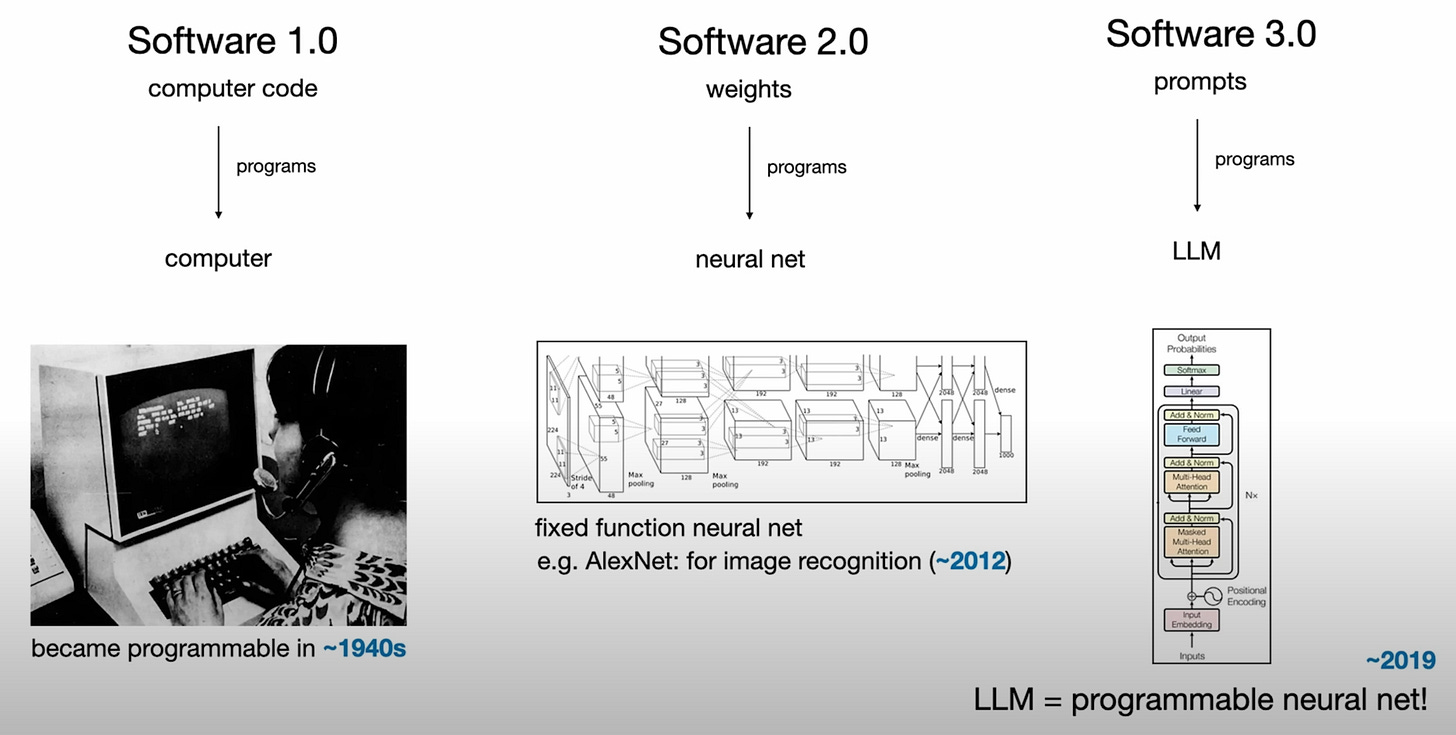

One of the reasons that Karpathy’s talk is worth your time is the way he synthesizes what is going on in the AI space right now and puts it into historical context. One of the most salient ideas in the talk is what Karpathy calls Software 3.0. In Karpathy’s model, LLMs are a key part of the next era of programming, building on and working alongside more traditional models of programming as codified in what he calls Software 1.0 and 2.0.

Software 1.0 is the earliest incarnation of modern computing. In this model, processors execute code. This is the kind of programming that we typically think about. We write code in a particular computing language (e.g., C or Python) that is ultimately converted to assembly and then machine code to be run on a processor core. This is the kind of programming that I teach in my digital electronics and computer engineering class, where we build a RISC-V processor core from the ground up.

The second era of software, what Karpathy calls Software 2.0, emerged in the 2010s. In this era, the computing architecture of choice is the neural network. While a processor is configured by machine language instructions, the "program" to configure a neural network looks different. It is composed not of a series of instructions to be executed, but of a collection of weights which are found by iteratively tuning them to fit a particular set of data. The neural network powers many of the breakthroughs that we've seen over the last fifteen years, anywhere deep learning has been applied. Software 2.0 is what runs your recommendation algorithms and performs functions like facial recognition and labeling in your photos app.

As Karpathy looks toward the future, he suggests the next frontier is emerging. This is what he calls Software 3.0. In this era, the Large Language Model (LLM) is the computing architecture of choice. Once again, the way we interact with the architecture (aka the program) takes a new form. In Software 3.0, a program is not a series of instructions or a collection of weights connecting neurons, but rather a series of tokens: colloquially, a prompt, which the LLM continues. These prompts are often simply strings of words, but the concept is more general. A prompt is composed of tokens, and the continuation can take many forms, including image, audio, and video.
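To make the three eras concrete, here is a minimal Python sketch of the same toy task (deciding whether a number is positive) written each way. Everything in it is an illustrative assumption rather than anything from Karpathy's talk: the function names, the tiny perceptron standing in for a neural network, and the prompt wording. The Software 3.0 version only builds the prompt; sending it to an actual LLM is left out.

```python
from typing import List, Tuple

# Software 1.0: a person writes the rule as explicit instructions.
def is_positive_v1(x: float) -> bool:
    return x > 0.0

# Software 2.0: the "program" is a pair of weights found by iteratively
# tuning them to fit labeled examples (a one-input perceptron).
def train_v2(data: List[Tuple[float, int]], epochs: int = 20, lr: float = 0.1) -> Tuple[float, float]:
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, label in data:
            pred = 1 if w * x + b > 0 else 0
            err = label - pred  # zero when the guess was right
            w += lr * err * x
            b += lr * err
    return w, b

def is_positive_v2(weights: Tuple[float, float], x: float) -> bool:
    w, b = weights
    return w * x + b > 0

# Software 3.0: the "program" is a prompt, a sequence of tokens that an
# LLM would continue. (Hypothetical wording; no model is called here.)
def build_prompt_v3(x: float) -> str:
    return f"Answer with exactly 'yes' or 'no'. Is the number {x} positive?"

examples = [(-2.0, 0), (-1.0, 0), (1.0, 1), (2.0, 1)]
weights = train_v2(examples)
```

The learned weights agree with the handwritten rule on the training examples, but nobody wrote that rule down; it was found from the data.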

Programming as an Engineer and an Educator

As an engineer, computing has always been an important part of my problem-solving toolkit. As time goes on, it becomes an increasingly important one. The ability to write and understand software is one of the most powerful engineering skills in our modern world. As the physical world continues to become increasingly integrated with the digital one, this will only become more true.

What we are facing now in the Software 3.0 world is yet another opportunity to revisit and reinvestigate not only what we are building, but who will be building it and why. Setting aside my engineering hat and putting on my educator one, I’m continuing to ask the bigger questions about LLMs.

The first thing to keep in mind is that if you are prompting an LLM, you should think of yourself as a programmer. To come at it from another angle, this means you specifically should not interact with an LLM as if it were another human intelligence. It is a machine. A computing architecture, to be specific. A sophisticated machine, to be sure, but a machine nonetheless.

What it means to be a programmer continues to blur and shift from Software 1.0 to 3.0. In Software 1.0, the code was static and was designed to operate deterministically. Given a specific input, you could always predict the output. With the advent of Software 2.0, this tidy input-output relationship loosened. The behavior was learned from data rather than written out by hand, and the model would assign a probability to each possible output rather than follow an explicit rule. This is further loosened in the Software 3.0 world, where the output of the transformer architecture that pulls so much weight in the LLM engine is, by design, sampled with a degree of randomness. This randomness is a feature, complete with desirable and undesirable effects.
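Where that randomness enters can be shown with a small Python sketch of temperature sampling, one common way an LLM's next token is chosen. The scores and token names below are made up for illustration, and `sample_token` is a hypothetical helper, not any particular library's API.

```python
import math
import random
from typing import Dict

def sample_token(logits: Dict[str, float], temperature: float, rng: random.Random) -> str:
    """Pick the next token from model scores (logits)."""
    if temperature == 0.0:
        # Greedy decoding: always take the highest-scoring token.
        return max(logits, key=lambda tok: logits[tok])
    # Softmax with temperature; subtracting the max keeps exp() stable.
    scaled = [score / temperature for score in logits.values()]
    top = max(scaled)
    weights = [math.exp(s - top) for s in scaled]
    return rng.choices(list(logits), weights=weights, k=1)[0]

logits = {"cat": 2.0, "dog": 1.5, "fish": 0.5}  # made-up scores
rng = random.Random(0)

# Temperature 0 gives the same token every time; temperature 1 does not.
greedy = {sample_token(logits, 0.0, rng) for _ in range(200)}
sampled = {sample_token(logits, 1.0, rng) for _ in range(200)}
```

With the temperature at zero the same input always yields the same token, much like Software 1.0. Raising the temperature trades that predictability for variety.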

With all this in view (and as someone who is still largely a novice in the Software 2.0 world), I’ve decided that it is important to dip my toes into the world of Software 3.0. Not just to write about LLMs, but to use them and play with them from a variety of angles. I would encourage you to do the same. Even if you are strongly opposed to LLMs and all that they stand for, there is a certain amount of learning and reflection that is best accompanied by personal experience. And so, to reflect on the potential impacts of Software 3.0 on education, this week I dove in head first.

Pulling the LLM out of my toolkit

Last week, I wrote about one way that I see LLMs being a useful tool, as glue to connect pieces of software engineering pipelines that can be otherwise very challenging and time-consuming to connect. This week, I played around with vibe coding.

Vibe coding, a phrase coined earlier this year by Karpathy, describes a certain mode of LLM-assisted programming that is in many ways the natural end of his 2023 tweet that "the hottest new programming language is English."

The basic idea is that you do not need to know anything about the low-level details of what the computer is doing, but rather that you can program at a much higher layer of abstraction. At this level of abstraction, you need only to describe what the computer should do rather than directly specify how it should do it.

Of course, this abstraction comes with tradeoffs. When you rely on a compiler, you give up the nitty-gritty control of directly writing assembly. So also, when you rely on an LLM to generate code from your prompts, you loosen the reins that much more.

As my first project, I decided to experiment with a tool to help with email introductions. One of the most valuable things you can offer the people in your network is connections with each other. You’ve probably been on the receiving end of these sorts of emails. Someone thinks of you and sends an email to introduce you to someone else they know, in the hope that it leads to a fruitful interaction.

The thoughtfulness comes not in formatting and the actual writing of the email, but in the consideration of the people involved. But at some level, the ability to make connections is limited by the time it takes to draft the email template. A good intro email includes information about both people, such as their role and organization, a link to their LinkedIn profile, and a bit of context for why you’re making the connection. Then, all of that needs to be packaged into an email draft.
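The packaging step is simple enough to sketch in a few lines of Python. This is only an illustration of the idea, assuming a hypothetical `Contact` record with the fields mentioned above; the names, wording, and example people are invented and are not taken from the app itself.

```python
from dataclasses import dataclass
from typing import Tuple

@dataclass
class Contact:
    name: str
    role: str
    organization: str
    email: str
    linkedin_url: str

def describe(c: Contact) -> str:
    # One line per person: who they are and where to find them.
    return f"{c.name} ({c.role}, {c.organization}): {c.linkedin_url}"

def build_intro(a: Contact, b: Contact, note: str) -> Tuple[str, str]:
    """Return (subject, body) for an introduction email."""
    subject = f"Intro: {a.name} <> {b.name}"
    body = "\n".join([
        f"Hi {a.name.split()[0]} and {b.name.split()[0]},",
        "",
        "I wanted to connect the two of you.",
        "",
        describe(a),
        describe(b),
        "",
        note,  # the personal context, which is the part worth writing by hand
    ])
    return subject, body

ada = Contact("Ada Example", "Professor", "Example College",
              "ada@example.com", "https://www.linkedin.com/in/ada-example")
grace = Contact("Grace Sample", "Engineer", "Sample Labs",
                "grace@example.com", "https://www.linkedin.com/in/grace-sample")
subject, body = build_intro(ada, grace, "You both care about engineering education.")
```

Only the `note` argument needs real thought; everything else is lookup and formatting.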

Most of this process is pretty tedious. Lots of copy and paste. And even worse, this information is often already stored somewhere in a database of contact information.

And so, this week I set out to vibe code a small web app that will help me compose these introduction emails. For my first project, I decided to test out lovable.dev, one of a few different platforms (Replit and Vercel are two other popular ones) that combine a chat interface with a live preview of your code. For the techies among us, Lovable is built on top of a React/TypeScript stack and also has built-in integration with Supabase for a backend database. It also has information about various other APIs for other services like Airtable and Google apps in its context, so it can easily write code to interact with them as well.

In the end, after just about a day or so of work, I have a fully working web app. It connects to an Airtable database and syncs in names, emails, and LinkedIn URLs, and uses that information to generate a template intro email. It stores user profile information and hashed passwords in its own database hosted on Supabase. It connects with Gmail via Google OAuth to directly save emails to your drafts.

As the user, you start by selecting two people from the database and adding a personal note to add context to the connection. Then the app builds a draft email, nicely formatted with URLs that you can preview and edit. Then you save the draft directly to your Gmail drafts folder with pre-populated address and subject fields.
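The last step, saving to the drafts folder, can be sketched in Python with only the standard library. This is a sketch under assumptions rather than the code Lovable generated: it builds the message and wraps it in the request body that, as I understand the Gmail API, the drafts endpoint accepts (a base64url-encoded message under `message.raw`). The OAuth flow and the HTTP request itself are omitted, `build_draft_payload` is a hypothetical helper, and the addresses are placeholders.

```python
import base64
from email.message import EmailMessage
from typing import Dict, List

def build_draft_payload(to: List[str], subject: str, body: str) -> Dict[str, Dict[str, str]]:
    """Build the JSON body for creating a draft (assumed shape; see lead-in)."""
    msg = EmailMessage()
    msg["To"] = ", ".join(to)      # pre-populated address field
    msg["Subject"] = subject       # pre-populated subject field
    msg.set_content(body)
    # The message travels as URL-safe base64 text inside the JSON body.
    raw = base64.urlsafe_b64encode(msg.as_bytes()).decode("ascii")
    return {"message": {"raw": raw}}

payload = build_draft_payload(
    ["ada@example.com", "grace@example.com"],
    "Intro: Ada <> Grace",
    "Hello both!",
)
```

Because the result is a draft rather than a sent message, there is still a chance to preview and edit it in Gmail before it goes out.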

So what?

To be honest, I was impressed by the experience of building this app over the last week. It was one of the most fun and engaging experiences I've had programming in a while. I am certainly no software engineer, but using Lovable’s LLM-powered chat, I was able to get to a working prototype that solves a real pain point.

To be sure, there were bumps along the way. While most of the time the generated code worked smoothly, there were times when the vibe stalled. The conventional advice when encountering an error while vibe coding is just to feed it back into the LLM with an instruction like “fix this bug.” Sometimes this works, but a few times along the way I had to get the LLM unstuck with my own programming know-how. The most obvious example was when it kept running into roadblocks with the Gmail integration until I figured out that I needed to turn on Gmail API access in the Google Cloud Console settings.

What I found most interesting was the way that building in this way sparked my curiosity for more. After building the app, I'm more interested in learning how the tech stack works, the nuts and bolts of how the integrations work, and the connection between the frontend and the backend database. I'm even a bit more curious about SQL, believe it or not (co-invented by a Harvey Mudd Engineering alum, by the way). What vibe coding enables you to do is to get a full pass of a prototype without needing all the information to start. With a little bit of curiosity and determination, you can get to a pretty decent place and then iterate from there.

Intersections with Education

It’s getting late, and I’ve already gone on too long, but I wanted to end with a few early takeaways on what I think this all means for our work as educators.

Education should be fundamentally focused on forming humans, not providing technical training. The rapid evolution of software, as exemplified by the explosion of LLMs in recent years, is just one example of the fragility of technical expertise. The tools are always evolving. The disruption writ large across education in response to generative AI does more to expose the fragility of the transactional foundation that we've built than anything else. We need to re-center ourselves on the timeless and enduring task of cultivating character and building wisdom.

The skills needed to effectively use LLMs have significant overlap with the skills needed to use any other new technology well (e.g., Google, the word processor). In other words, LLMs do not make good pedagogy obsolete. (They do, however, pretty quickly undercut assignments and other activities that lean into transactional and extrinsic motivation.) LLMs, as a new way to interact with information, make it all the more important that we understand how to think deeply and carefully about ideas. Of course, there is much to be said (on another day) about the competing objectives here, as there are many ways that LLMs can degrade the exact skills that are necessary to use them well.

LLMs and generative AI will continue to shape the world around us as Software 3.0 continues to permeate. In order to grapple with the direction we are heading, we will need leaders who are staying on the cutting edge of the technical capabilities while simultaneously continuing to reflect and articulate the broader societal context within which these tools should or should not be used. Perhaps this sounds a bit like what we're trying to do at Harvey Mudd. We can (and we must) walk and chew gum at the same time. The stakes are too high not to engage.


Reading Recommendations

The always thoughtful

reflects on a distinctly different tack in his most recent post.

tries to help us distinguish between the potential for AI to damage our brains versus our thinking.

This podcast from The

with Michael Sandel is on my “to listen” list. The answer, of course, is no.

The Book Nook

Another children’s book recommendation this week: Kermit the Hermit by Bill Peet. It’s a delightful story about the adventure of a somewhat miserly and greedy hermit crab who experiences and is shaped by generosity.

The Professor Is In

Week eight of ten of summer research is quickly slipping away. It's exciting to see the continued progress and to watch my students begin to synthesize what they’ve been working on.

Leisure Line

Sporting my new straw hat from Costco in the pool. Hard to beat on a hot and sunny July afternoon in Southern California.

Still Life

A nice show and atmosphere at the Pomona track last Friday for fireworks.